Pathfinder Variational Inference

Pathfinder [Zhang et al., 2021] is a variational inference algorithm that produces samples from the posterior of a Bayesian model. It compares favorably to the widely used ADVI algorithm. On large problems, it should scale better than most MCMC algorithms, including dynamic HMC (i.e. NUTS), at the cost of a more biased estimate of the posterior. For details on the algorithm, see the arXiv preprint.

This algorithm is implemented in BlackJAX, a library of inference algorithms for JAX. Because PyMC can compile models to JAX (via PyTensor), we can run BlackJAX's Pathfinder on any PyMC model with some simple wrapper code.

This wrapper code is implemented in pymc-experimental. This tutorial shows how to run Pathfinder on your PyMC model.

You first need to install pymc-experimental:

pip install git+https://github.com/pymc-devs/pymc-experimental

You also need JAX and BlackJAX, which the wrapper relies on; see each project's installation instructions.

import arviz as az

import numpy as np

import pymc as pm

import pymc_experimental as pmx

print(f"Running on PyMC v{pm.__version__}")

Running on PyMC v5.0.1

First, define your PyMC model. Here, we use the 8-schools model.

# Data of the Eight Schools Model

J = 8

y = np.array([28.0, 8.0, -3.0, 7.0, -1.0, 1.0, 18.0, 12.0])

sigma = np.array([15.0, 10.0, 16.0, 11.0, 9.0, 11.0, 10.0, 18.0])

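with pm.Model() as model:

    mu = pm.Normal("mu", mu=0.0, sigma=10.0)

    tau = pm.HalfCauchy("tau", 5.0)

    theta = pm.Normal("theta", mu=0, sigma=1, shape=J)

    # Non-centered parameterization: shift and scale the standard-normal theta
    theta_1 = mu + tau * theta

    # Use theta_1 (not the raw theta) as the mean so mu and tau enter the likelihood
    obs = pm.Normal("obs", mu=theta_1, sigma=sigma, shape=J, observed=y)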
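The line `theta_1 = mu + tau * theta` is a non-centered parameterization: instead of drawing the school effects directly from a normal with mean `mu` and scale `tau`, the model draws a standard normal and then shifts and scales it. The following NumPy sketch illustrates that both routes give the same distribution. The values of `mu` and `tau` here are arbitrary illustrative numbers, not estimates from the 8-schools data.

```python
import numpy as np

rng = np.random.default_rng(0)

# Illustrative values only (assumed for this sketch, not fitted to the data)
mu, tau = 4.0, 3.0
n_draws = 100_000

# Non-centered: draw a standard normal, then shift by mu and scale by tau
theta_raw = rng.normal(0.0, 1.0, size=n_draws)
theta_noncentered = mu + tau * theta_raw

# Centered: draw directly from Normal(mu, tau)
theta_centered = rng.normal(mu, tau, size=n_draws)

# Both sets of draws should have mean close to mu and standard deviation close to tau
print(theta_noncentered.mean(), theta_noncentered.std())
print(theta_centered.mean(), theta_centered.std())
```

The non-centered form is commonly preferred for hierarchical models like this one because the sampled variable `theta` has a fixed unit scale regardless of `tau`.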

Next, we call pmx.fit() and pass in the algorithm we want it to use.

with model:

    idata = pmx.fit(method="pathfinder")

/Users/reshamashaikh/miniforge3/envs/pymc-dev/lib/python3.11/site-packages/pymc/sampling/jax.py:39: UserWarning: This module is experimental.

warnings.warn("This module is experimental.")

Running pathfinder...

/Users/reshamashaikh/miniforge3/envs/pymc-dev/lib/python3.11/site-packages/pymc/model.py:980: FutureWarning: Model.RV_dims is deprecated. User Model.named_vars_to_dims instead.

warnings.warn(

Transforming variables...

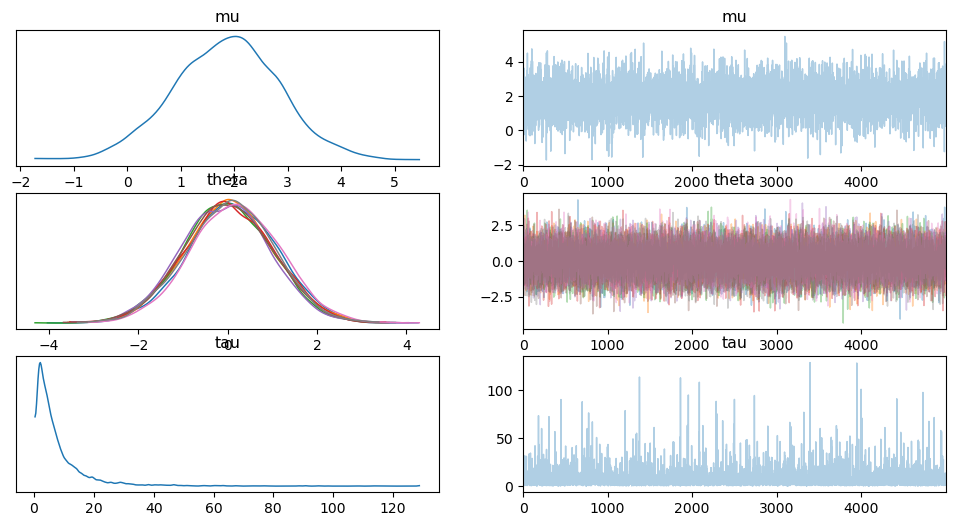

Just like pymc.sample(), this returns an InferenceData object (idata) with samples from the posterior. Note that because these samples do not come from an MCMC chain, convergence cannot be assessed with the usual MCMC diagnostics.

az.plot_trace(idata);
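Since chain-based diagnostics do not apply here, one option is to restrict the numerical summary to plain statistics. The sketch below uses ArviZ's `az.summary` with `kind="stats"`, which reports means, standard deviations, and HDI bounds without the ESS and R-hat columns. The `fake_idata` object is a hypothetical stand-in built from random numbers so that the block runs on its own, and the `az.from_dict(posterior=...)` call assumes an ArviZ 0.x release like the one listed in the watermark.

```python
import arviz as az
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical stand-in for fitted draws (random numbers, not real results).
# Arrays are shaped (chain, draw, *variable_shape), here with a single "chain".
fake_idata = az.from_dict(
    posterior={
        "mu": rng.normal(4.0, 3.0, size=(1, 1000)),
        "theta": rng.normal(0.0, 1.0, size=(1, 1000, 8)),
    }
)

# kind="stats" keeps the summary statistics and leaves out ESS and R-hat
summary = az.summary(fake_idata, kind="stats")
print(summary)
```

With the object returned by the fit, the equivalent call would be `az.summary(idata, kind="stats")`.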

References

Lu Zhang, Bob Carpenter, Andrew Gelman, and Aki Vehtari. Pathfinder: parallel quasi-Newton variational inference. arXiv preprint arXiv:2108.03782, 2021.

Watermark

%load_ext watermark

%watermark -n -u -v -iv -w -p xarray

Last updated: Sun Feb 05 2023

Python implementation: CPython

Python version : 3.11.0

IPython version : 8.8.0

xarray: 2022.12.0

pymc : 5.0.1

numpy : 1.24.1

arviz : 0.14.0

pymc_experimental: 0.0.1

Watermark: 2.3.1

License notice

All the notebooks in this example gallery are provided under the MIT License, which allows modification and redistribution for any use provided the copyright and license notices are preserved.

Citing PyMC examples

To cite this notebook, use the DOI provided by Zenodo for the pymc-examples repository.

Important

Many notebooks are adapted from other sources: blogs, books… In such cases you should cite the original source as well.

Also remember to cite the relevant libraries used by your code.

Here is a citation template in BibTeX:

@incollection{citekey,

  author = "<notebook authors, see above>",

  title = "<notebook title>",

  editor = "PyMC Team",

  booktitle = "PyMC examples",

  doi = "10.5281/zenodo.5654871"

}

which once rendered could look like: