Variational Inference: Bayesian Neural Networks#

Current trends in Machine Learning#

Probabilistic Programming, Deep Learning and “Big Data” are among the biggest topics in machine learning. Within Probabilistic Programming (PP), a lot of innovation is focused on making things scale using Variational Inference. In this example, I will show how to use Variational Inference in PyMC to fit a simple Bayesian Neural Network. I will also discuss how bridging Probabilistic Programming and Deep Learning can open up very interesting avenues to explore in future research.

Probabilistic Programming at scale#

Probabilistic Programming allows very flexible creation of custom probabilistic models and is mainly concerned with inference and learning from your data. The approach is inherently Bayesian so we can specify priors to inform and constrain our models and get uncertainty estimation in the form of a posterior distribution. Using MCMC sampling algorithms we can draw samples from this posterior to very flexibly estimate these models. PyMC, NumPyro, and Stan are the current state-of-the-art tools for constructing and estimating these models. One major drawback of sampling, however, is that it’s often slow, especially for high-dimensional models and large datasets. That’s why more recently, variational inference algorithms have been developed that are almost as flexible as MCMC but much faster. Instead of drawing samples from the posterior, these algorithms fit a distribution (e.g. normal) to the posterior, turning a sampling problem into an optimization problem. Automatic Differentiation Variational Inference [Kucukelbir et al., 2015] is implemented in several probabilistic programming packages including PyMC, NumPyro and Stan.

Unfortunately, when it comes to traditional ML problems like classification or (non-linear) regression, Probabilistic Programming often plays second fiddle (in terms of accuracy and scalability) to more algorithmic approaches like ensemble learning (e.g. random forests or gradient boosted regression trees).
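To make "turning a sampling problem into an optimization problem" concrete, here is a minimal sketch on a toy model that is not the network used later. The model (a Normal mean with known noise), the learning rate and all variable names are assumptions chosen for illustration. Because the model is conjugate, the ELBO has a closed form, so plain gradient ascent on the variational parameters should recover the exact posterior.

```python
import numpy as np

# Toy model (an assumption for illustration, not the network used later):
#   mu ~ Normal(0, tau^2),  x_i ~ Normal(mu, 1)
rng = np.random.default_rng(0)
x = rng.normal(loc=2.0, scale=1.0, size=50)
n, tau = x.size, 10.0
prec = n + 1.0 / tau**2  # exact posterior precision

# Variational family q(mu) = Normal(m, s^2), with s = exp(rho) so that s > 0
m, rho = 0.0, 0.0
lr = 0.01
for _ in range(2000):
    s2 = np.exp(2.0 * rho)
    # Gradients of the closed-form ELBO for this conjugate model
    grad_m = x.sum() - m * prec
    grad_rho = 1.0 - s2 * prec
    m += lr * grad_m
    rho += lr * grad_rho

exact_mean = x.sum() / prec
exact_sd = prec**-0.5
print(f"VI:    mean={m:.4f}, sd={np.exp(rho):.4f}")
print(f"exact: mean={exact_mean:.4f}, sd={exact_sd:.4f}")
```

ADVI follows the same idea, but estimates the ELBO gradient stochastically with automatic differentiation, so no closed form is needed.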

Deep Learning#

Now in their third renaissance, neural networks have been making headlines repeatedly by dominating almost any object recognition benchmark, kicking ass at Atari games [Mnih et al., 2013], and beating the world-champion Lee Sedol at Go [D. Silver, 2016]. From a statistical point of view, Neural Networks are extremely good non-linear function approximators and representation learners. While mostly known for classification, they have been extended to unsupervised learning with AutoEncoders [Kingma and Welling, 2014] and in all sorts of other interesting ways (e.g. Recurrent Networks, or MDNs to estimate multimodal distributions). Why do they work so well? No one really knows as the statistical properties are still not fully understood.

A large part of the innovation in deep learning is the ability to train these extremely complex models. This rests on several pillars:

Speed: leveraging the GPU allowed for much faster processing.

Software: frameworks like PyTorch and TensorFlow allow flexible creation of abstract models that can then be optimized and compiled to CPU or GPU.

Learning algorithms: training on sub-sets of the data – stochastic gradient descent – allows us to train these models on massive amounts of data. Techniques like dropout help to avoid overfitting.

Architectural: A lot of innovation comes from changing the input layers, like for convolutional neural nets, or the output layers, like for MDNs.

Bridging Deep Learning and Probabilistic Programming#

On one hand we have Probabilistic Programming which allows us to build rather small and focused models in a very principled and well-understood way to gain insight into our data; on the other hand we have deep learning which uses many heuristics to train huge and highly complex models that are amazing at prediction. Recent innovations in variational inference allow probabilistic programming to scale model complexity as well as data size. We are thus at the cusp of being able to combine these two approaches to hopefully unlock new innovations in Machine Learning. For more motivation, see also Dustin Tran’s blog post.

While this would allow Probabilistic Programming to be applied to a much wider set of interesting problems, I believe this bridging also holds great promise for innovations in Deep Learning. Some ideas are:

Uncertainty in predictions: As we will see below, the Bayesian Neural Network informs us about the uncertainty in its predictions. I think uncertainty is an underappreciated concept in Machine Learning as it’s clearly important for real-world applications. But it could also be useful in training. For example, we could train the model specifically on samples it is most uncertain about.

Uncertainty in representations: We also get uncertainty estimates of our weights which could inform us about the stability of the learned representations of the network.

Regularization with priors: Weights are often L2-regularized to avoid overfitting; this very naturally becomes a Gaussian prior for the weight coefficients. We could, however, imagine all kinds of other priors, like spike-and-slab to enforce sparsity (this would be more like using the L1-norm).

Transfer learning with informed priors: If we wanted to train a network on a new object recognition data set, we could bootstrap the learning by placing informed priors centered around weights retrieved from other pre-trained networks, like GoogLeNet [Szegedy et al., 2014].

Hierarchical Neural Networks: A very powerful approach in Probabilistic Programming is hierarchical modeling that allows pooling of things that were learned on sub-groups to the overall population (see Hierarchical Linear Regression in PyMC3). Applied to Neural Networks, in hierarchical data sets, we could train individual neural nets to specialize on sub-groups while still being informed about representations of the overall population. For example, imagine a network trained to classify car models from pictures of cars. We could train a hierarchical neural network where a sub-neural network is trained to tell apart models from only a single manufacturer. The intuition is that all cars from a certain manufacturer share certain similarities, so it would make sense to train individual networks that specialize on brands. However, due to the individual networks being connected at a higher layer, they would still share information with the other specialized sub-networks about features that are useful to all brands. Interestingly, different layers of the network could be informed by various levels of the hierarchy – e.g. early layers that extract visual lines could be identical in all sub-networks while the higher-order representations would be different. The hierarchical model would learn all that from the data.

Other hybrid architectures: We can more freely build all kinds of neural networks. For example, Bayesian non-parametrics could be used to flexibly adjust the size and shape of the hidden layers to optimally scale the network architecture to the problem at hand during training. Currently, this requires costly hyper-parameter optimization and a lot of tribal knowledge.
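The "Regularization with priors" idea in the list above can be written out in a few lines. This is a standard derivation rather than anything specific to PyMC; the symbols $\sigma$ (prior standard deviation) and $\lambda$ (penalty strength) are notation introduced here for illustration.

```latex
\begin{aligned}
p(w) &= \prod_j \mathcal{N}(w_j \mid 0, \sigma^2)
\quad\Longrightarrow\quad
\log p(w) = -\frac{1}{2\sigma^2} \sum_j w_j^2 + \text{const}, \\
\hat{w}_{\text{MAP}} &= \arg\max_w \left[ \log p(y \mid X, w) + \log p(w) \right]
= \arg\min_w \left[ -\log p(y \mid X, w) + \lambda \lVert w \rVert_2^2 \right],
\qquad \lambda = \frac{1}{2\sigma^2}.
\end{aligned}
```

So a tighter Gaussian prior (smaller $\sigma$) corresponds to a stronger L2 penalty. Replacing the Gaussian with a Laplace prior turns the penalty into an L1 norm by the same argument.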

Bayesian Neural Networks in PyMC#

Generating data#

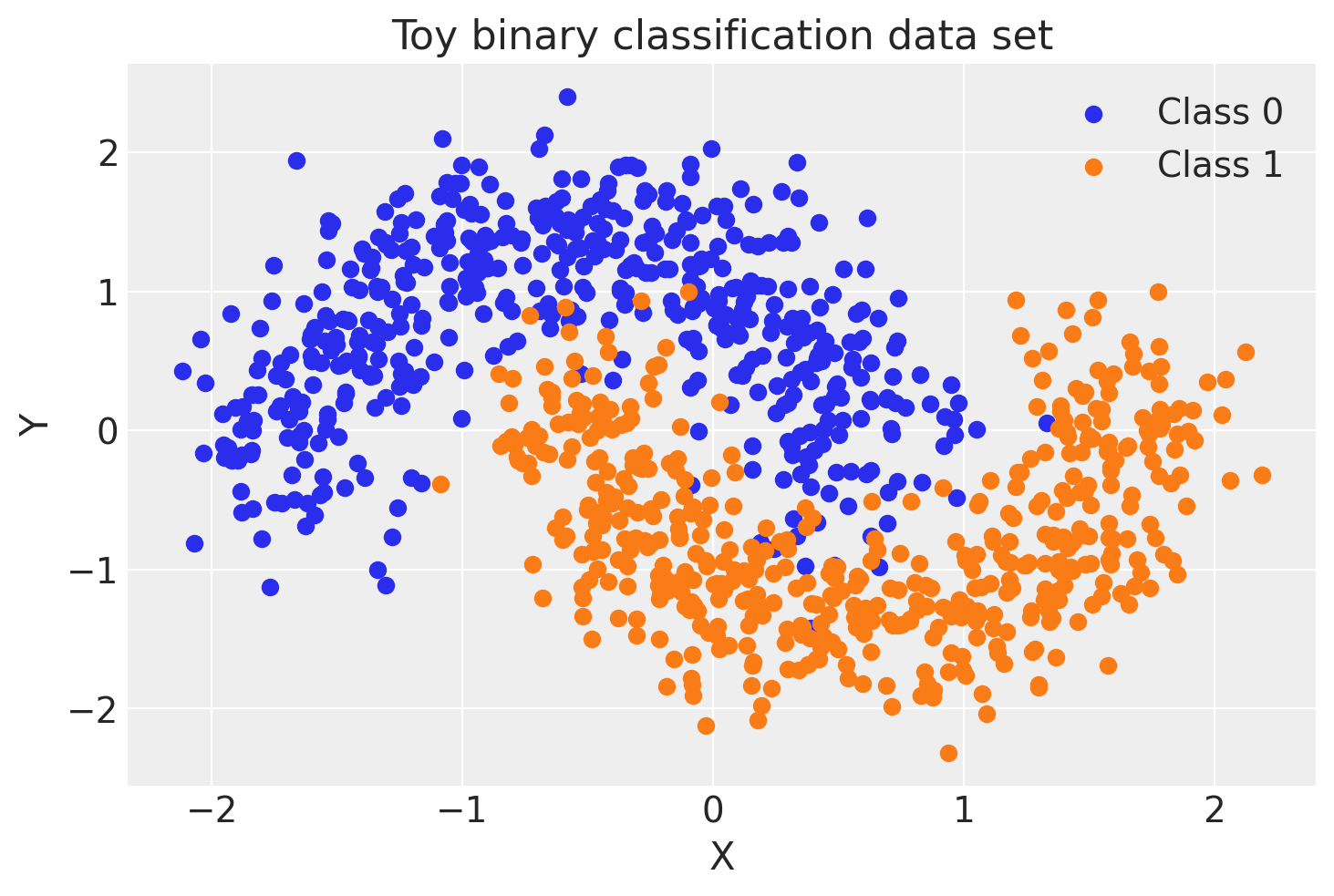

First, let’s generate some toy data – a simple binary classification problem that’s not linearly separable.

import arviz as az
import matplotlib.pyplot as plt
import numpy as np
import pymc as pm
import pytensor
import pytensor.tensor as pt
import seaborn as sns
from sklearn.datasets import make_moons
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import scale

%config InlineBackend.figure_format = 'retina'
floatX = pytensor.config.floatX
RANDOM_SEED = 9927
rng = np.random.default_rng(RANDOM_SEED)
az.style.use("arviz-darkgrid")

X, Y = make_moons(noise=0.2, random_state=0, n_samples=1000)
X = scale(X)
X = X.astype(floatX)
Y = Y.astype(floatX)
X_train, X_test, Y_train, Y_test = train_test_split(X, Y, test_size=0.5)

fig, ax = plt.subplots()
ax.scatter(X[Y == 0, 0], X[Y == 0, 1], color="C0", label="Class 0")
ax.scatter(X[Y == 1, 0], X[Y == 1, 1], color="C1", label="Class 1")
sns.despine()
ax.legend()
ax.set(xlabel="X", ylabel="Y", title="Toy binary classification data set");

Model specification#

A neural network is quite simple. The basic unit is a perceptron which is nothing more than logistic regression. We use many of these in parallel and then stack them up to get hidden layers. Here we will use 2 hidden layers with 5 neurons each which is sufficient for such a simple problem.

def construct_nn(ann_input, ann_output):
    n_hidden = 5
    # Initialize random weights between each layer
    init_1 = rng.standard_normal(size=(X_train.shape[1], n_hidden)).astype(floatX)
    init_2 = rng.standard_normal(size=(n_hidden, n_hidden)).astype(floatX)
    init_out = rng.standard_normal(size=n_hidden).astype(floatX)
    coords = {
        "hidden_layer_1": np.arange(n_hidden),
        "hidden_layer_2": np.arange(n_hidden),
        "train_cols": np.arange(X_train.shape[1]),
        # "obs_id": np.arange(X_train.shape[0]),
    }
    with pm.Model(coords=coords) as neural_network:
        # Note: these data containers replace the function arguments of the same
        # name, so the model is always built from X_train and Y_train
        ann_input = pm.Data("ann_input", X_train, mutable=True, dims=("obs_id", "train_cols"))
        ann_output = pm.Data("ann_output", Y_train, mutable=True, dims="obs_id")
        # Weights from input to hidden layer
        weights_in_1 = pm.Normal(
            "w_in_1", 0, sigma=1, initval=init_1, dims=("train_cols", "hidden_layer_1")
        )
        # Weights from 1st to 2nd layer
        weights_1_2 = pm.Normal(
            "w_1_2", 0, sigma=1, initval=init_2, dims=("hidden_layer_1", "hidden_layer_2")
        )
        # Weights from hidden layer to output
        weights_2_out = pm.Normal("w_2_out", 0, sigma=1, initval=init_out, dims="hidden_layer_2")
        # Build neural-network using tanh activation function
        act_1 = pm.math.tanh(pm.math.dot(ann_input, weights_in_1))
        act_2 = pm.math.tanh(pm.math.dot(act_1, weights_1_2))
        act_out = pm.math.sigmoid(pm.math.dot(act_2, weights_2_out))
        # Binary classification -> Bernoulli likelihood
        out = pm.Bernoulli(
            "out",
            act_out,
            observed=ann_output,
            total_size=Y_train.shape[0],  # IMPORTANT for minibatches
            dims="obs_id",
        )
    return neural_network

neural_network = construct_nn(X_train, Y_train)

That’s not so bad. The Normal priors help regularize the weights. Usually we would add a constant b to the inputs but I omitted it here to keep the code cleaner.
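To see what the model computes for a single set of weights, the forward pass can be sketched in plain NumPy. This is only an illustration: the inputs and weights are random stand-ins (one hypothetical draw from the Normal(0, 1) priors), not fitted values, and the variable names simply mirror the ones used in the model.

```python
import numpy as np

rng = np.random.default_rng(0)
n_obs, n_features, n_hidden = 4, 2, 5

# Hypothetical inputs and one draw of weights from the Normal(0, 1) priors
X = rng.standard_normal((n_obs, n_features))
w_in_1 = rng.standard_normal((n_features, n_hidden))
w_1_2 = rng.standard_normal((n_hidden, n_hidden))
w_2_out = rng.standard_normal(n_hidden)


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


act_1 = np.tanh(X @ w_in_1)            # shape (n_obs, n_hidden)
act_2 = np.tanh(act_1 @ w_1_2)         # shape (n_obs, n_hidden)
p_class_1 = sigmoid(act_2 @ w_2_out)   # shape (n_obs,), probability of class 1

print(act_1.shape, act_2.shape, p_class_1.shape)
```

In the Bayesian version, every weight matrix is a random variable, so each posterior draw of the weights yields a different `p_class_1`.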

Variational Inference: Scaling model complexity#

We could now just run an MCMC sampler like pymc.NUTS which works pretty well in this case but, as was already mentioned, this will become very slow as we scale our model up to deeper architectures with more layers.

Instead, we will use the pymc.ADVI variational inference algorithm. This is much faster and will scale better. Note that this is a mean-field approximation, so we ignore correlations in the posterior.
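What ignoring correlations costs can be illustrated with a small NumPy sketch. It uses a hypothetical two-dimensional Gaussian as a stand-in for a posterior (not the network's actual posterior) and relies on a standard result: for a Gaussian target, the factorized Gaussian that minimizes KL(q || p) has variances equal to the inverse of the diagonal of the precision matrix.

```python
import numpy as np

# Hypothetical "posterior": a 2-D Gaussian with strongly correlated components
cov = np.array([[1.0, 0.9],
                [0.9, 1.0]])
precision = np.linalg.inv(cov)

# For a Gaussian target, the factorized Gaussian that minimizes KL(q || p)
# has variances equal to 1 / diag(precision)
mean_field_var = 1.0 / np.diag(precision)
true_marginal_var = np.diag(cov)

print("true marginal variances:", true_marginal_var)
print("mean-field variances:   ", mean_field_var)
```

With a correlation of 0.9 the mean-field variances come out at 0.19 instead of 1, so a mean-field fit can be noticeably overconfident about individual weights when the true posterior is correlated.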

%%time
with neural_network:
    approx = pm.fit(n=30_000)

Finished [100%]: Average Loss = 133.94

CPU times: user 18.7 s, sys: 134 ms, total: 18.8 s

Wall time: 18.9 s

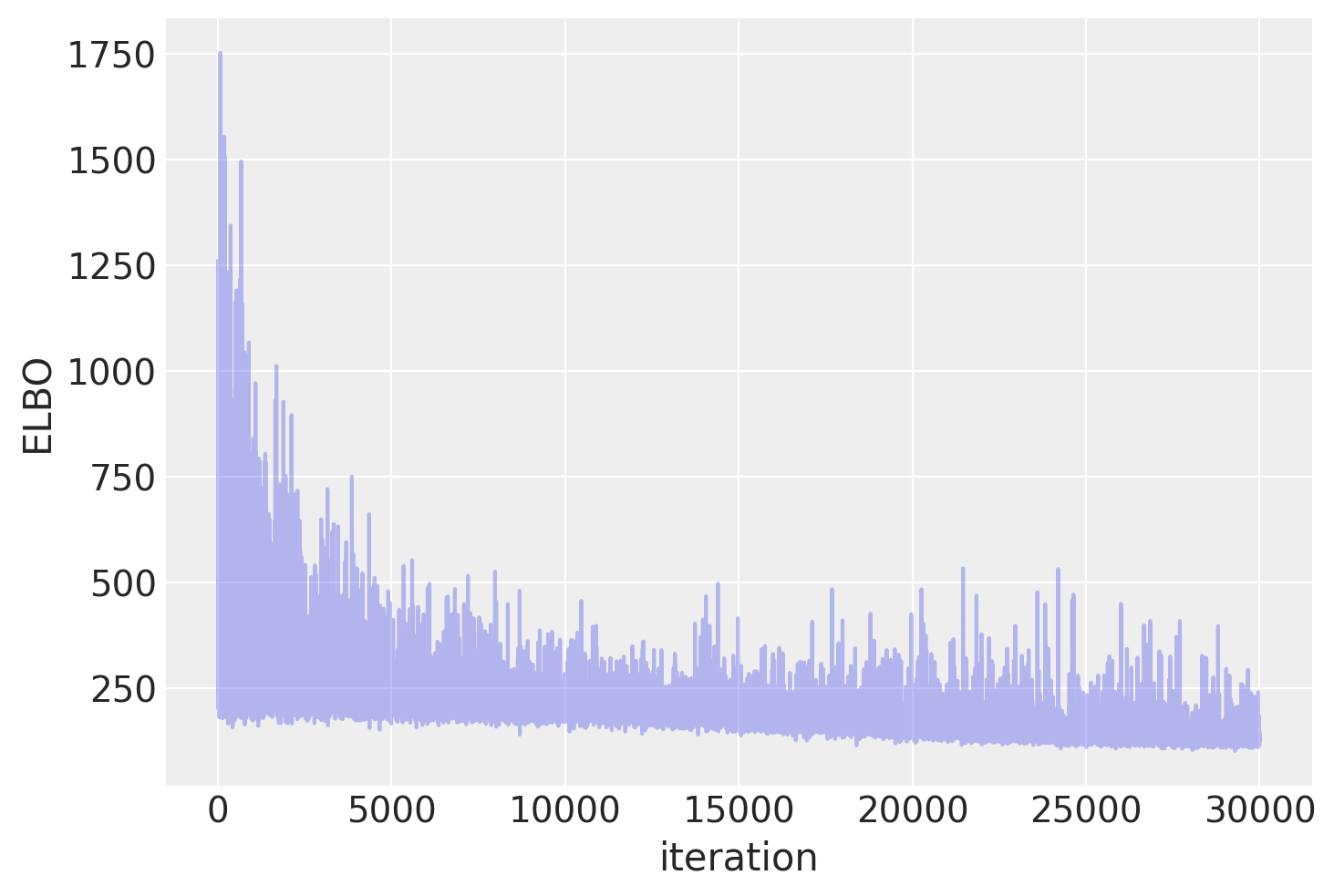

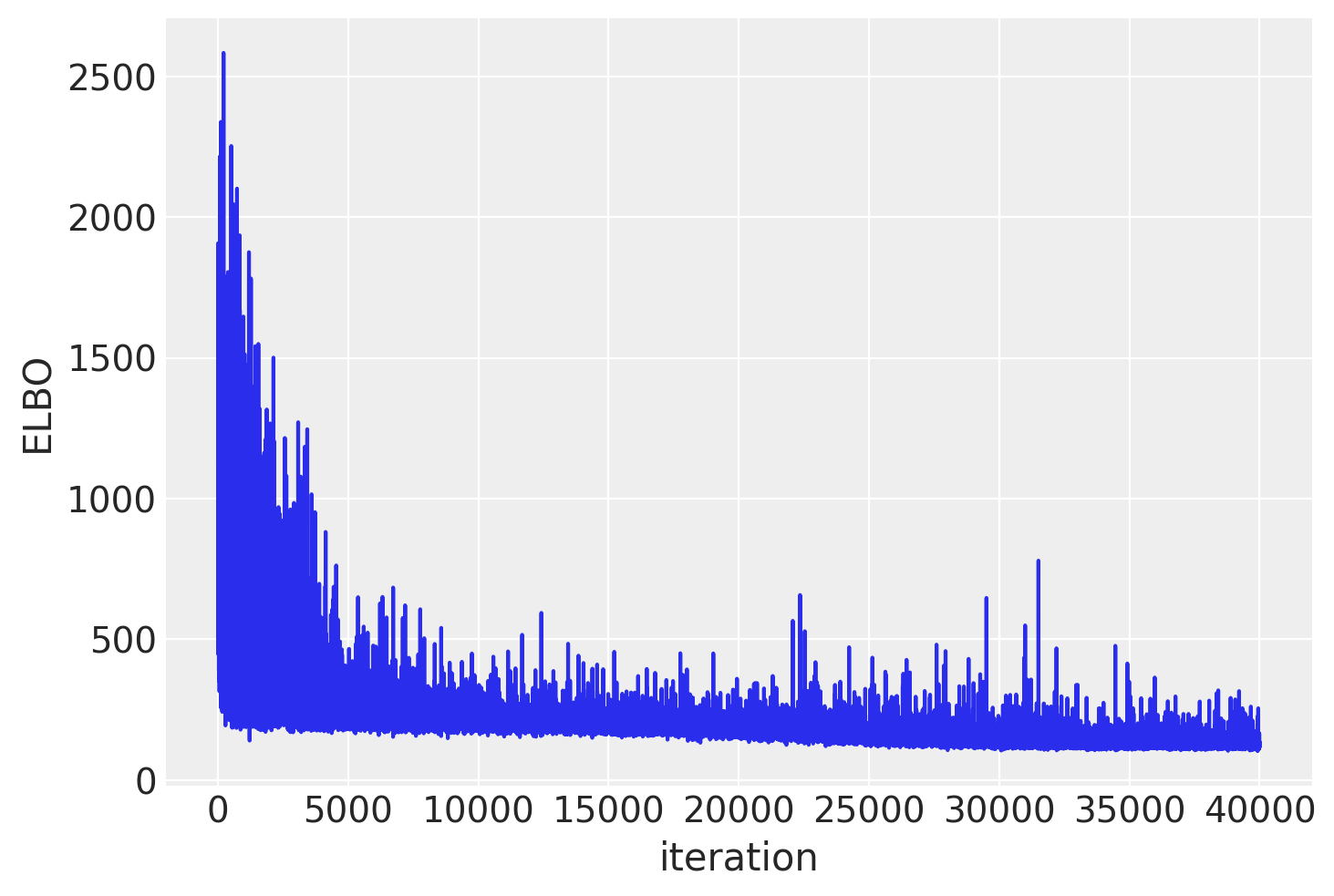

Plotting the objective function (the loss that is minimized, i.e. the negative ELBO), we can see that the optimization iteratively improves the fit.

plt.plot(approx.hist, alpha=0.3)
plt.ylabel("Loss (negative ELBO)")
plt.xlabel("iteration");

trace = approx.sample(draws=5000)

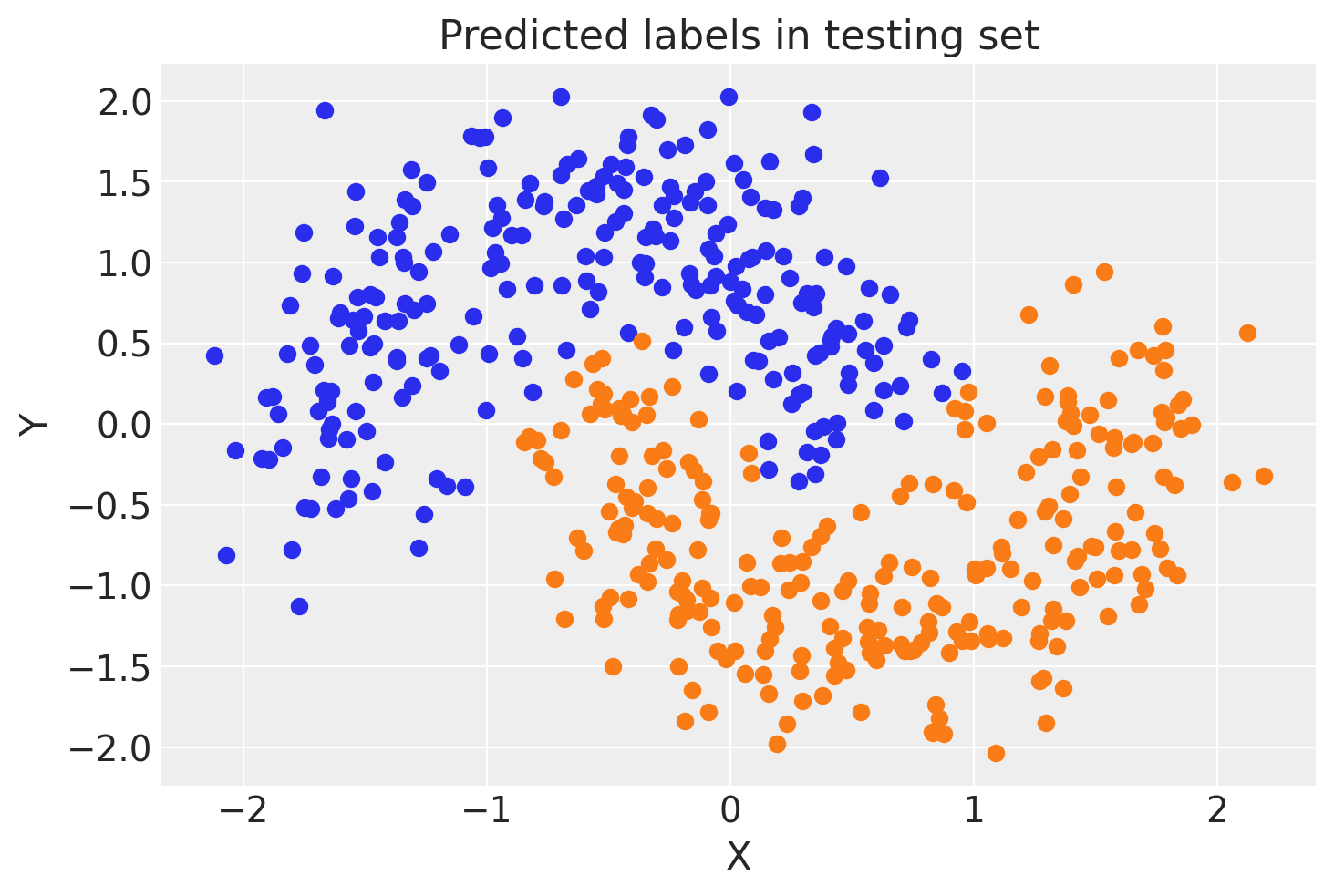

Now that we have trained our model, let’s predict on the hold-out set using a posterior predictive check (PPC). We can use sample_posterior_predictive() to generate new data (in this case class predictions) from the posterior (sampled from the variational estimation).

with neural_network:
    pm.set_data(new_data={"ann_input": X_test})
    ppc = pm.sample_posterior_predictive(trace)
    trace.extend(ppc)

Sampling: [out]

We can average the predictions for each observation to estimate the underlying probability of class 1.

pred = ppc.posterior_predictive["out"].mean(("chain", "draw")) > 0.5

fig, ax = plt.subplots()
ax.scatter(X_test[pred == 0, 0], X_test[pred == 0, 1], color="C0")
ax.scatter(X_test[pred == 1, 0], X_test[pred == 1, 1], color="C1")
sns.despine()
ax.set(title="Predicted labels in testing set", xlabel="X", ylabel="Y");

print(f"Accuracy = {(Y_test == pred.values).mean() * 100}%")

Accuracy = 95.0%

Hey, our neural network did all right!

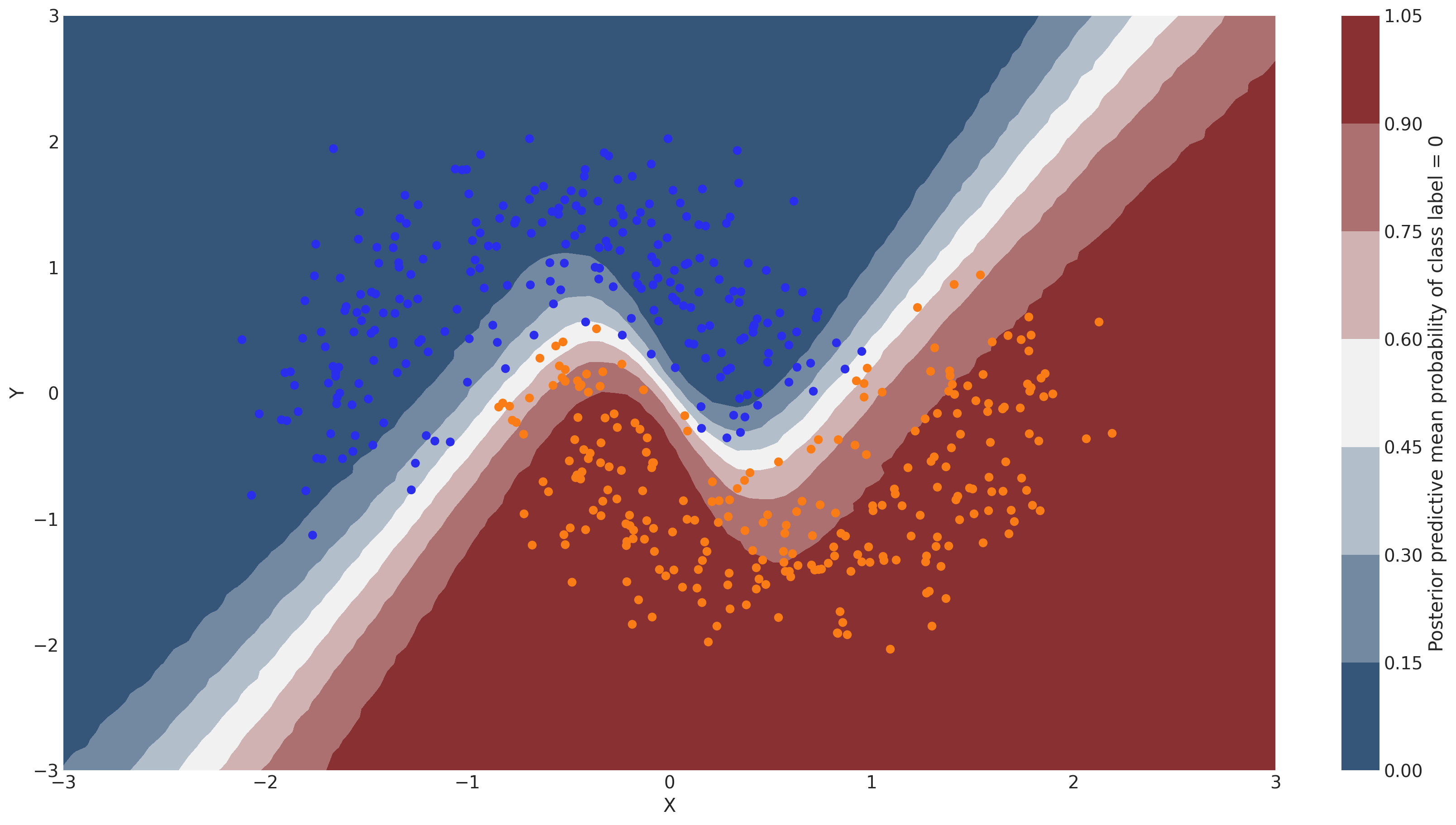

Let’s look at what the classifier has learned#

For this, we evaluate the class probability predictions on a grid over the whole input space.

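# Assumed grid definition (not shown in this listing): 100 x 100 points over
# [-3, 3]^2, consistent with reshape(100, 100) and the axis limits used below
grid = np.mgrid[-3:3:100j, -3:3:100j].astype(floatX)
grid_2d = grid.reshape(2, -1).T
dummy_out = np.ones(grid_2d.shape[0], dtype=floatX)
with neural_network:
    pm.set_data(new_data={"ann_input": grid_2d, "ann_output": dummy_out})
    ppc = pm.sample_posterior_predictive(trace)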

Sampling: [out]

y_pred = ppc.posterior_predictive["out"]

Probability surface#

cmap = sns.diverging_palette(250, 12, s=85, l=25, as_cmap=True)
fig, ax = plt.subplots(figsize=(16, 9))
contour = ax.contourf(
    grid[0], grid[1], y_pred.mean(("chain", "draw")).values.reshape(100, 100), cmap=cmap
)
ax.scatter(X_test[pred == 0, 0], X_test[pred == 0, 1], color="C0")
ax.scatter(X_test[pred == 1, 0], X_test[pred == 1, 1], color="C1")
cbar = plt.colorbar(contour, ax=ax)
_ = ax.set(xlim=(-3, 3), ylim=(-3, 3), xlabel="X", ylabel="Y")
# The mean of the 0/1 draws estimates the probability of class 1
cbar.ax.set_ylabel("Posterior predictive mean probability of class label = 1");

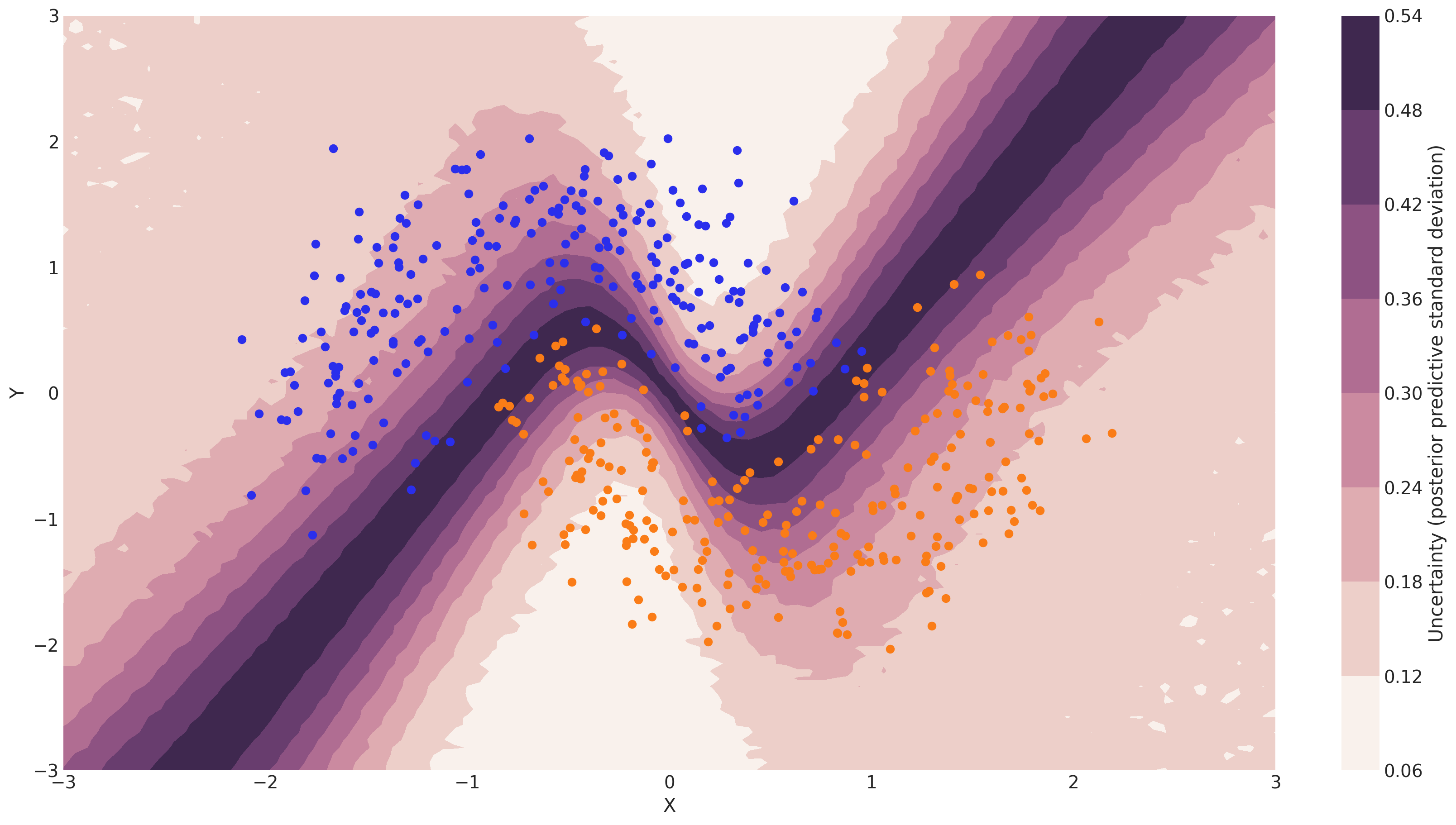

Uncertainty in predicted value#

Note that we could have done everything above with a non-Bayesian Neural Network. The mean of the posterior predictive for each class label should be close to the maximum likelihood predicted values. However, we can also look at the standard deviation of the posterior predictive to get a sense for the uncertainty in our predictions. Here is what that looks like:

cmap = sns.cubehelix_palette(light=1, as_cmap=True)
fig, ax = plt.subplots(figsize=(16, 9))
contour = ax.contourf(
    grid[0], grid[1], y_pred.squeeze().values.std(axis=0).reshape(100, 100), cmap=cmap
)
ax.scatter(X_test[pred == 0, 0], X_test[pred == 0, 1], color="C0")
ax.scatter(X_test[pred == 1, 0], X_test[pred == 1, 1], color="C1")
cbar = plt.colorbar(contour, ax=ax)
_ = ax.set(xlim=(-3, 3), ylim=(-3, 3), xlabel="X", ylabel="Y")
cbar.ax.set_ylabel("Uncertainty (posterior predictive standard deviation)");

We can see that very close to the decision boundary, our uncertainty as to which label to predict is highest. You can imagine that associating predictions with uncertainty is a critical property for many applications like health care. To further maximize accuracy, we might want to train the model primarily on samples from that high-uncertainty region.
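Why the spread peaks at the decision boundary can be checked with a short simulation. Because the posterior predictive draws are 0/1 labels, their standard deviation at a point with mean class-1 probability p is sqrt(p(1 - p)), which is largest at p = 0.5. The probabilities and the number of draws below are made up for illustration; they do not come from the fitted model.

```python
import numpy as np

rng = np.random.default_rng(0)
p = np.linspace(0.05, 0.95, 19)  # hypothetical predicted probabilities of class 1

# 20,000 simulated 0/1 predictions per probability, mimicking posterior predictive draws
draws = rng.binomial(n=1, p=p, size=(20_000, p.size))
empirical_sd = draws.std(axis=0)
analytic_sd = np.sqrt(p * (1 - p))

print("p with largest spread:", p[np.argmax(analytic_sd)])
print("max |empirical - analytic|:", np.abs(empirical_sd - analytic_sd).max())
```

This measure combines uncertainty about the weights with the intrinsic noise of a Bernoulli outcome. To isolate the former, one could instead look at the spread of the predicted probabilities across posterior draws.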

Mini-batch ADVI#

So far, we have trained our model on all data at once. Obviously this won’t scale to something like ImageNet. Moreover, training on mini-batches of data (stochastic gradient descent) can help escape local minima and can lead to faster convergence.

Fortunately, ADVI can be run on mini-batches as well. It just requires some setting up:

minibatch_x = pm.Minibatch(X_train, batch_size=50)
minibatch_y = pm.Minibatch(Y_train, batch_size=50)
neural_network_minibatch = construct_nn(minibatch_x, minibatch_y)
with neural_network_minibatch:
    approx = pm.fit(40000, method=pm.ADVI())

Finished [100%]: Average Loss = 128.11

plt.plot(approx.hist)
plt.ylabel("Loss (negative ELBO)")
plt.xlabel("iteration");

As you can see, mini-batch ADVI’s running time is much lower. It also seems to converge faster.
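The reason the likelihood in the model carries a total_size argument is that a mini-batch only sees part of the data, so its log-likelihood has to be rescaled to stand in for the full data set. The sketch below illustrates that rescaling with NumPy on made-up labels and probabilities. It shows the principle only and is not PyMC's internal implementation.

```python
import numpy as np

rng = np.random.default_rng(0)
N, batch_size = 500, 50

# Hypothetical labels and predicted probabilities for the full training set
y = rng.integers(0, 2, size=N)
p = rng.uniform(0.05, 0.95, size=N)


def bernoulli_loglik(y, p):
    return np.sum(y * np.log(p) + (1 - y) * np.log(1 - p))


full = bernoulli_loglik(y, p)

# Split a random permutation into disjoint mini-batches and rescale each one
idx = rng.permutation(N).reshape(-1, batch_size)
scaled = np.array([(N / batch_size) * bernoulli_loglik(y[b], p[b]) for b in idx])

print(f"full-data log-likelihood:       {full:.2f}")
print(f"mean of rescaled batch values:  {scaled.mean():.2f}")
print(f"spread across batches (sd):     {scaled.std():.2f}")
```

Each rescaled batch value is a noisy estimate of the full-data log-likelihood, and on average they agree with it. Without the N / batch_size factor the prior would be weighted far too heavily relative to the data.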

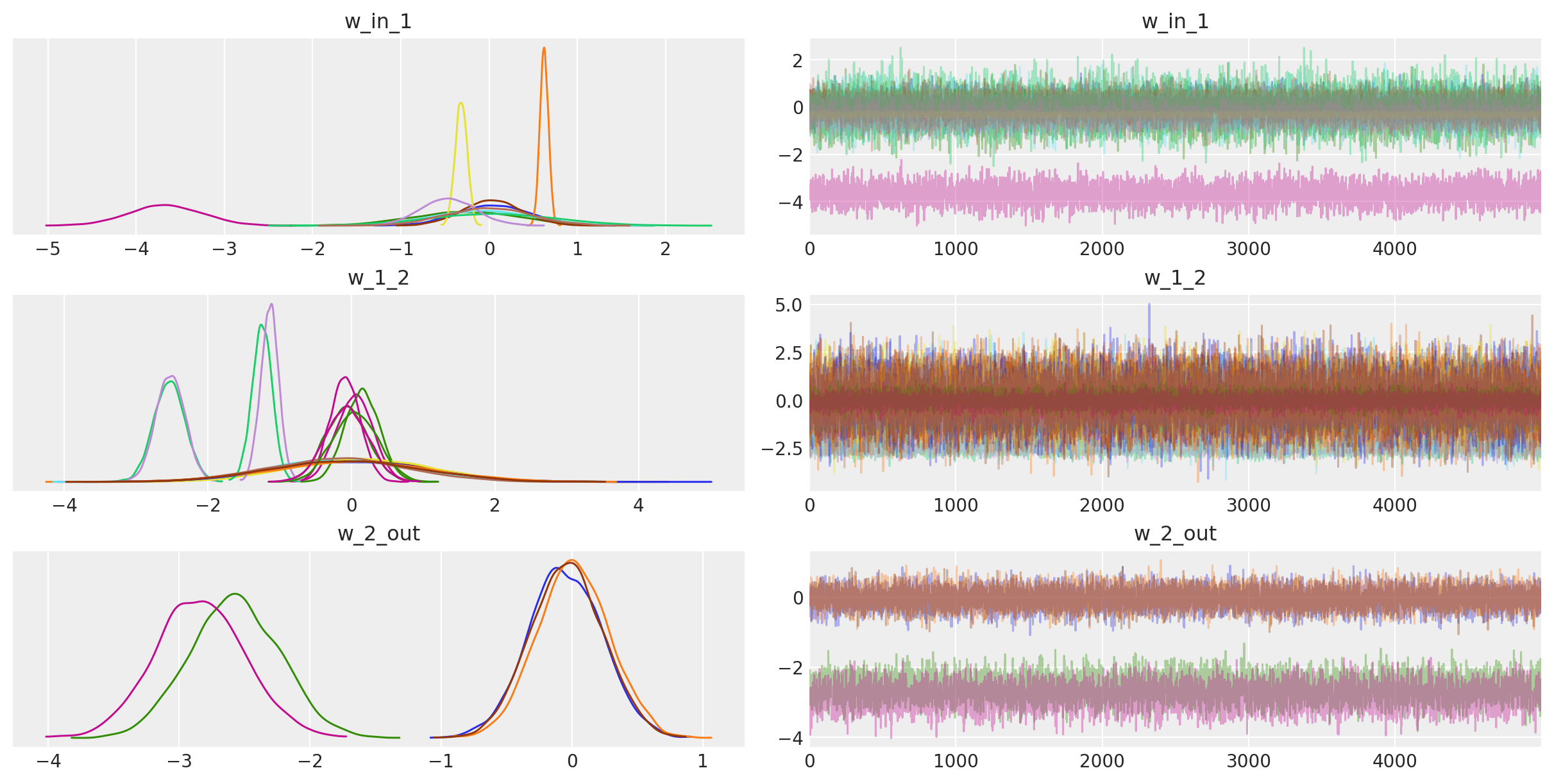

For fun, we can also look at the trace. The point is that we also get uncertainty of our Neural Network weights.

az.plot_trace(trace);

You might argue that the above network isn’t really deep, but note that we could easily extend it to have more layers, including convolutional ones to train on more challenging data sets.

Acknowledgements#

Taku Yoshioka did a lot of work on ADVI in PyMC3, including the mini-batch implementation as well as the sampling from the variational posterior. I’d also like to thank the Stan guys (specifically Alp Kucukelbir and Daniel Lee) for deriving ADVI and teaching us about it. Thanks also to Chris Fonnesbeck, Andrew Campbell, Taku Yoshioka, and Peadar Coyle for useful comments on an earlier draft.

References#

Alp Kucukelbir, Rajesh Ranganath, Andrew Gelman, and David M. Blei. Automatic variational inference in stan. 2015. arXiv:1506.03431.

Volodymyr Mnih, Koray Kavukcuoglu, David Silver, Alex Graves, Ioannis Antonoglou, Daan Wierstra, and Martin Riedmiller. Playing atari with deep reinforcement learning. 2013. arXiv:1312.5602.

D. Silver, A. Huang, C. Maddison, et al. Mastering the game of Go with deep neural networks and tree search. Nature, 529:484–489, 2016. URL: https://doi.org/10.1038/nature16961.

Diederik P Kingma and Max Welling. Auto-encoding variational bayes. 2014. arXiv:1312.6114.

Christian Szegedy, Wei Liu, Yangqing Jia, Pierre Sermanet, Scott Reed, Dragomir Anguelov, Dumitru Erhan, Vincent Vanhoucke, and Andrew Rabinovich. Going deeper with convolutions. 2014. arXiv:1409.4842.

Watermark#

%load_ext watermark

%watermark -n -u -v -iv -w -p xarray

Last updated: Wed Jan 25 2023

Python implementation: CPython

Python version : 3.8.16

IPython version : 8.7.0

xarray: 2023.1.0

matplotlib: 3.6.3

pymc : 5.0.2

numpy : 1.22.1

seaborn : 0.11.2

pytensor : 2.9.1

arviz : 0.14.0

Watermark: 2.3.1

License notice#

All the notebooks in this example gallery are provided under the MIT License which allows modification, and redistribution for any use provided the copyright and license notices are preserved.

Citing PyMC examples#

To cite this notebook, use the DOI provided by Zenodo for the pymc-examples repository.

Important

Many notebooks are adapted from other sources: blogs, books… In such cases you should cite the original source as well.

Also remember to cite the relevant libraries used by your code.

Here is a citation template in bibtex:

@incollection{citekey,
  author    = "<notebook authors, see above>",
  title     = "<notebook title>",
  editor    = "PyMC Team",
  booktitle = "PyMC examples",
  doi       = "10.5281/zenodo.5654871"
}
