Probabilistic Matrix Factorization for Making Personalized Recommendations¶

```python
%matplotlib inline

import arviz as az
import matplotlib.pyplot as plt
import numpy as np
import pandas as pd
import pymc3 as pm
import xarray as xr

print(f"Running on PyMC3 v{pm.__version__}")
```

Running on PyMC3 v3.11.2

```python
%config InlineBackend.figure_format = 'retina'
RANDOM_SEED = 8927
rng = np.random.default_rng(RANDOM_SEED)
az.style.use("arviz-darkgrid")
```

Motivation¶

So you are browsing for something to watch on Netflix and just not liking the suggestions. You just know you can do better. All you need to do is collect some ratings data from yourself and friends and build a recommendation algorithm. This notebook will guide you in doing just that!

We’ll start out by getting some intuition for how our model will work. Then we’ll formalize our intuition. Afterwards, we’ll examine the dataset we are going to use. Once we have some notion of what our data looks like, we’ll define some baseline methods for predicting preferences for movies. Following that, we’ll look at Probabilistic Matrix Factorization (PMF), which is a more sophisticated Bayesian method for predicting preferences. Having detailed the PMF model, we’ll use PyMC3 for MAP estimation and MCMC inference. Finally, we’ll compare the results obtained with PMF to those obtained from our baseline methods and discuss the outcome.

Intuition¶

Normally if we want recommendations for something, we try to find people who are similar to us and ask their opinions. If Bob, Alice, and Monty are all similar to me, and they all like crime dramas, I’ll probably like crime dramas. Now this isn’t always true. It depends on what we consider to be “similar”. In order to get the best bang for our buck, we really want to look for people who have the most similar taste. Taste being a complex beast, we’d probably like to break it down into something more understandable. We might try to characterize each movie in terms of various factors. Perhaps films can be moody, light-hearted, cinematic, dialogue-heavy, big-budget, etc. Now imagine we go through IMDB and assign each movie a rating in each of the categories. How moody is it? How much dialogue does it have? What’s its budget? Perhaps we use numbers between 0 and 1 for each category. Intuitively, we might call this the film’s profile.

Now suppose each of us has rated 5 movies. At this point, we can get a richer picture of our own preferences by looking at the film profiles of each of the movies we liked and didn’t like. Perhaps we take the averages across the 5 film profiles and call this our ideal type of film. In other words, we have computed some notion of our inherent preferences for various types of movies. Suppose Bob, Alice, and Monty all do the same. Now we can compare our preferences and determine how similar each of us really is. I might find that Bob is the most similar and the other two are still more similar than other people, but not as much as Bob. So I want recommendations from all three people, but when I make my final decision, I’m going to put more weight on Bob’s recommendation than on those I get from Alice and Monty.

While the above procedure sounds fairly effective as is, it also reveals an unexpected additional source of information. If we rated a particular movie highly, and we know its film profile, we can compare with the profiles of other movies. If we find one with very close numbers, it is probable we’ll also enjoy this movie. Both this approach and the one above are commonly known as neighborhood approaches. Techniques that leverage both of these approaches simultaneously are often called collaborative filtering [Koren et al., 2009]. The first approach we talked about uses user-user similarity, while the second uses item-item similarity. Ideally, we’d like to use both sources of information. The idea is we have a lot of items available to us, and we’d like to work together with others to filter the list of items down to those we’ll each like best. My list should have the items I’ll like best at the top and those I’ll like least at the bottom. Everyone else wants the same. If I get together with a bunch of other people, we all watch 5 movies, and we have some efficient computational process to determine similarity, we can very quickly order the movies to our liking.
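The user-user neighborhood idea from the last two paragraphs can be sketched in a few lines of NumPy. This is a toy illustration under stated assumptions, not part of the PMF model: the film profiles, the liked-movie lists, and the friends’ ratings are all made up, and cosine similarity is just one reasonable choice of similarity measure.

```python
import numpy as np

# Hypothetical film profiles (rows: movies; columns: moody, light-hearted,
# cinematic, dialogue-heavy, big-budget), each score in [0, 1].
film_profiles = np.array([
    [0.9, 0.1, 0.8, 0.6, 0.3],
    [0.2, 0.9, 0.4, 0.7, 0.5],
    [0.8, 0.2, 0.9, 0.3, 0.9],
    [0.1, 0.8, 0.2, 0.9, 0.2],
])


def taste_profile(liked):
    """Average the film profiles of the movies a person liked."""
    return film_profiles[liked].mean(axis=0)


def cosine(a, b):
    """Cosine similarity between two profile vectors."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


me = taste_profile([0, 2])
friends = {
    "Bob": taste_profile([0, 2]),
    "Alice": taste_profile([1, 2]),
    "Monty": taste_profile([1, 3]),
}
# Each friend's (hypothetical) 1-5 rating of a movie I haven't seen.
friend_ratings = {"Bob": 5.0, "Alice": 3.0, "Monty": 1.0}

# Weight each friend's rating by how similar their taste is to mine.
weights = {name: cosine(me, profile) for name, profile in friends.items()}
prediction = sum(weights[n] * friend_ratings[n] for n in friends) / sum(weights.values())
```

Bob’s taste matches mine exactly, so his rating of 5 carries the most weight, and the weighted prediction (about 3.3) lands above the unweighted average of 3.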

Formalization¶

Let’s take some time to make the intuitive notions we’ve been discussing more concrete. We have a set of \(M\) movies, or items (say, \(M = 100\)). We also have \(N\) people, whom we’ll call users of our recommender system. For each item, we’d like to find a \(D\) dimensional factor composition (film profile above) to describe the item. Ideally, we’d like to do this without actually going through and manually labeling all of the movies. Manual labeling would be both slow and error-prone, as different people will likely label movies differently. So we model each movie as a \(D\) dimensional vector, which is its latent factor composition. Furthermore, we expect each user to have some preferences, but without our manual labeling and averaging procedure, we have to rely on the latent factor compositions to learn \(D\) dimensional latent preference vectors for each user. The only thing we get to observe is the \(N \times M\) ratings matrix \(R\) provided by the users. Entry \(R_{ij}\) is the rating user \(i\) gave to item \(j\). Many of these entries may be missing, since most users will not have rated all \(M\) movies. Our goal is to fill in the missing values with predicted ratings based on the latent user preferences and item factor compositions, which we collect into the matrices \(U\) and \(V\) defined next. We denote the predicted ratings by \(R_{ij}^*\). We also define an indicator matrix \(I\), with entry \(I_{ij} = 0\) if \(R_{ij}\) is missing and \(I_{ij} = 1\) otherwise.
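As a concrete picture of \(R\) and \(I\), here is a toy sketch (hypothetical data, 0-based indices) that stores missing ratings as NaN, the same convention the code later in this notebook uses, and derives the indicator matrix from it.

```python
import numpy as np

# Hypothetical observed ratings as (user i, item j, rating) triples.
triples = [(0, 0, 5), (0, 2, 3), (1, 1, 4), (2, 0, 1), (2, 3, 2)]
n_users, n_items = 3, 4

# N x M ratings matrix R; unobserved entries are NaN.
R = np.full((n_users, n_items), np.nan)
for i, j, r in triples:
    R[i, j] = r

# Indicator matrix I: 1 where R_ij is observed, 0 where it is missing.
I = (~np.isnan(R)).astype(int)
```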

So we have an \(N \times D\) matrix of user preferences which we’ll call \(U\) and an \(M \times D\) factor composition matrix we’ll call \(V\). We also have an \(N \times M\) rating matrix we’ll call \(R\). We can think of each row \(U_i\) as indicating how much user \(i\) prefers each of the \(D\) latent factors. Each row \(V_j\) can be thought of as how strongly item \(j\) exhibits each of the latent factors. In order to make a recommendation, we need a suitable prediction function which maps a user preference vector \(U_i\) and an item latent factor vector \(V_j\) to a predicted rating. The choice of this prediction function is an important modeling decision, and a variety of prediction functions have been used. Perhaps the most common is the dot product of the two vectors, \(U_i \cdot V_j\) [Koren et al., 2009].

To better understand collaborative filtering (CF) techniques, let us explore a particular example. Imagine we are seeking to recommend movies using a model which infers five latent factors, so that each \(U_i\) and each \(V_j\) has five entries. In reality, the latent factors are often not interpretable in a straightforward manner, and most models make no attempt to understand what information is being captured by each factor. However, for the purposes of explanation, let us assume the five latent factors end up capturing the film profile we were discussing above. So our five latent factors are: moody, light-hearted, cinematic, dialogue, and budget. Then for a particular user \(i\), imagine we infer a preference vector \(U_i = \langle 0.5, 0.1, 1.5, 1.1, 0.3 \rangle\). Also, for a particular item \(j\), we infer these values for the latent factors: \(V_j = \langle 0.5, 1.5, 1.25, 0.8, 0.9 \rangle\). Using the dot product as the prediction function, we would calculate 3.425 as the predicted rating for that item, which is more or less a neutral preference given our 1 to 5 rating scale.
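The arithmetic in this example is a single dot product. A quick NumPy check, using the illustrative vectors from the paragraph (they are example values, not inferred from data):

```python
import numpy as np

# Latent factors in order: moody, light-hearted, cinematic, dialogue, budget.
U_i = np.array([0.5, 0.1, 1.5, 1.1, 0.3])   # preferences of user i (example values)
V_j = np.array([0.5, 1.5, 1.25, 0.8, 0.9])  # factor composition of item j (example values)

# Dot product: 0.25 + 0.15 + 1.875 + 0.88 + 0.27
predicted_rating = U_i @ V_j
```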

Data¶

The MovieLens 100k dataset [Harper and Konstan, 2016] was collected by the GroupLens Research Project at the University of Minnesota. This data set consists of 100,000 ratings (1-5) from 943 users on 1682 movies. Each user rated at least 20 movies, and we have basic information on the users (age, gender, occupation, zip code). Each movie includes basic information like title, release date, video release date, and genre. We will implement a model that is suitable for collaborative filtering on this data and evaluate it in terms of root mean squared error (RMSE) to validate the results.

The data was collected through the MovieLens website during the seven-month period from September 19th, 1997 through April 22nd, 1998. This data has been cleaned up: users who had fewer than 20 ratings or did not have complete demographic information were removed from this data set.

Let’s begin by exploring our data. We want to get a general feel for what it looks like and a sense for what sort of patterns it might contain. Here are the user rating data:

```python
data_kwargs = dict(sep="\t", names=["userid", "itemid", "rating", "timestamp"])
try:
    data = pd.read_csv("../data/ml_100k_u.data", **data_kwargs)
except FileNotFoundError:
    data = pd.read_csv(pm.get_data("ml_100k_u.data"), **data_kwargs)
data.head()
```

| | userid | itemid | rating | timestamp |
|---|---|---|---|---|
| 0 | 196 | 242 | 3 | 881250949 |
| 1 | 186 | 302 | 3 | 891717742 |
| 2 | 22 | 377 | 1 | 878887116 |
| 3 | 244 | 51 | 2 | 880606923 |
| 4 | 166 | 346 | 1 | 886397596 |

And here is the movie detail data:

```python
# fmt: off
movie_columns = ['movie id', 'movie title', 'release date', 'video release date', 'IMDb URL',
                 'unknown','Action','Adventure', 'Animation',"Children's", 'Comedy', 'Crime',
                 'Documentary', 'Drama', 'Fantasy', 'Film-Noir', 'Horror', 'Musical', 'Mystery',
                 'Romance', 'Sci-Fi', 'Thriller', 'War', 'Western']
# fmt: on

item_kwargs = dict(sep="|", names=movie_columns, index_col="movie id", parse_dates=["release date"])
try:
    movies = pd.read_csv("../data/ml_100k_u.item", **item_kwargs)
except FileNotFoundError:
    movies = pd.read_csv(pm.get_data("ml_100k_u.item"), **item_kwargs)
movies.head()
```

| movie id | movie title | release date | video release date | IMDb URL | unknown | Action | Adventure | Animation | Children's | Comedy | ... | Fantasy | Film-Noir | Horror | Musical | Mystery | Romance | Sci-Fi | Thriller | War | Western |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | Toy Story (1995) | 1995-01-01 | NaN | http://us.imdb.com/M/title-exact?Toy%20Story%2... | 0 | 0 | 0 | 1 | 1 | 1 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 2 | GoldenEye (1995) | 1995-01-01 | NaN | http://us.imdb.com/M/title-exact?GoldenEye%20(... | 0 | 1 | 1 | 0 | 0 | 0 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 |
| 3 | Four Rooms (1995) | 1995-01-01 | NaN | http://us.imdb.com/M/title-exact?Four%20Rooms%... | 0 | 0 | 0 | 0 | 0 | 0 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 |
| 4 | Get Shorty (1995) | 1995-01-01 | NaN | http://us.imdb.com/M/title-exact?Get%20Shorty%... | 0 | 1 | 0 | 0 | 0 | 1 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 5 | Copycat (1995) | 1995-01-01 | NaN | http://us.imdb.com/M/title-exact?Copycat%20(1995) | 0 | 0 | 0 | 0 | 0 | 0 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 |

5 rows × 23 columns

# Plot histogram of ratings

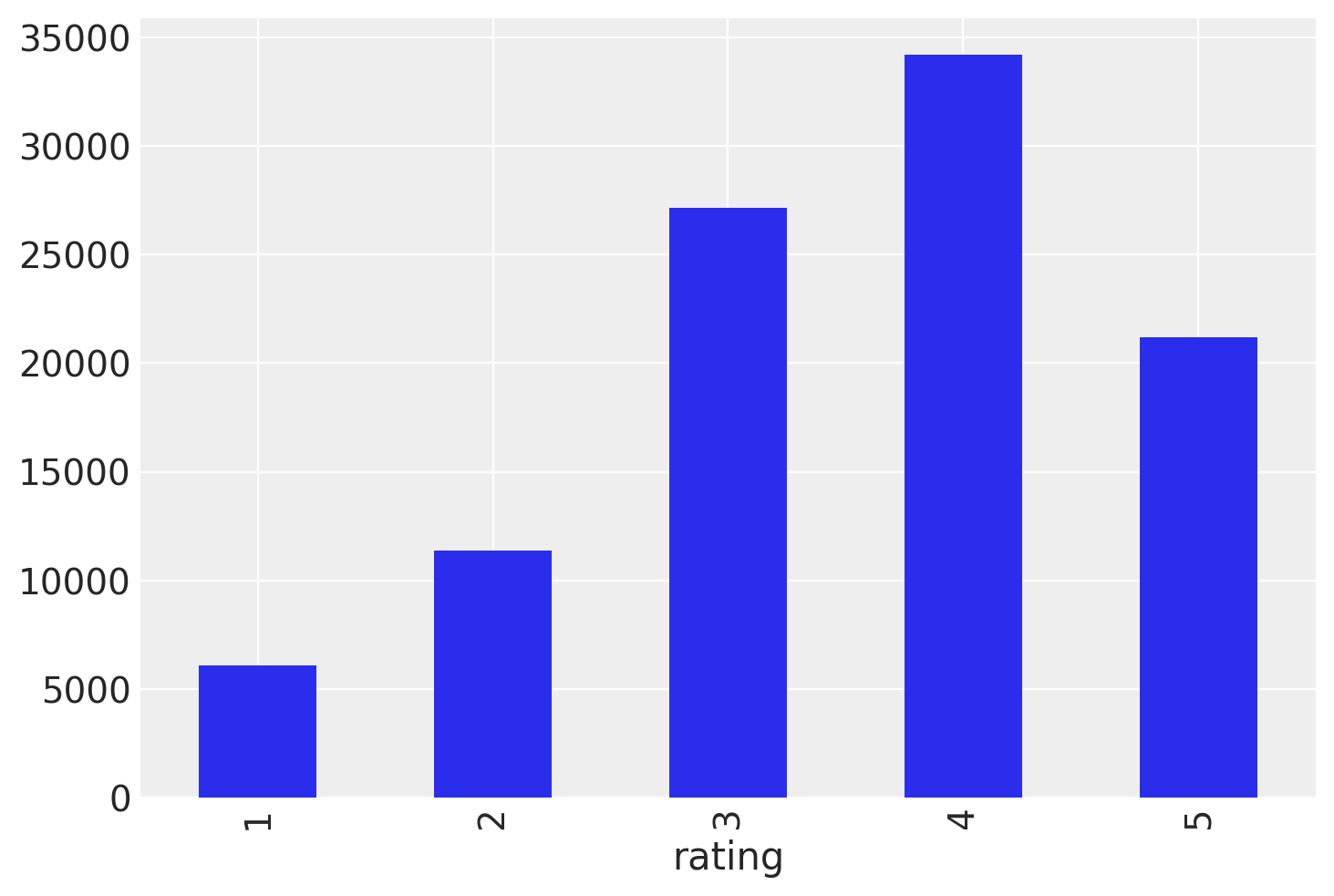

data.groupby("rating").size().plot(kind="bar");

```python
data.rating.describe()
```

```
count 100000.000000
mean 3.529860
std 1.125674
min 1.000000
25% 3.000000
50% 4.000000
75% 4.000000
max 5.000000
Name: rating, dtype: float64
```

This must be a decent batch of movies. From our exploration above, we know most ratings are in the range 3 to 5, and positive ratings are more likely than negative ratings. Let’s look at the means for each movie to see if we have any particularly good (or bad) movie here.

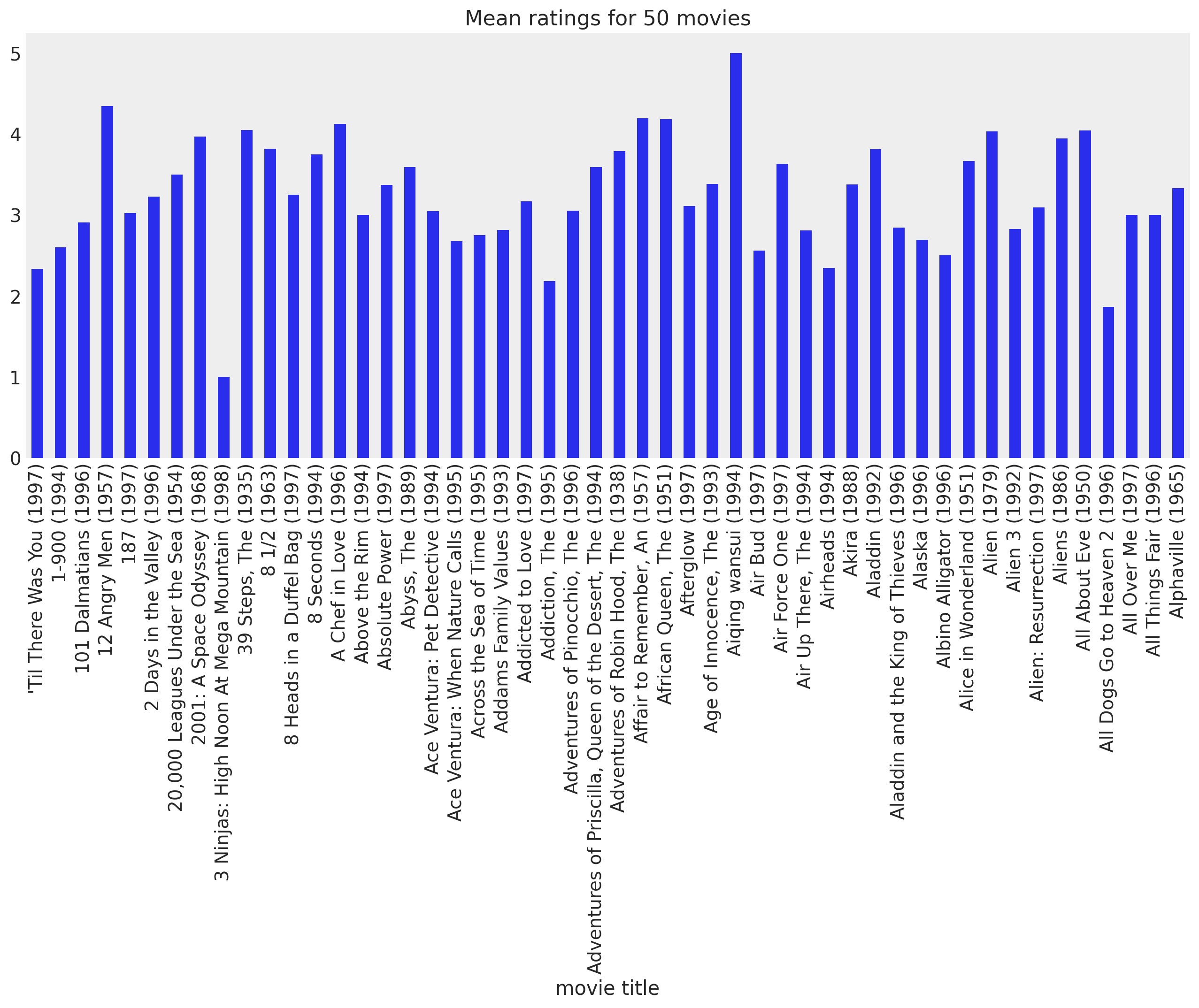

movie_means = data.join(movies["movie title"], on="itemid").groupby("movie title").rating.mean()

movie_means[:50].plot(kind="bar", grid=False, figsize=(16, 6), title="Mean ratings for 50 movies");


While the majority of the movies generally get positive feedback from users, there are definitely a few that stand out as bad. Let’s take a look at the worst and best movies, just for fun:

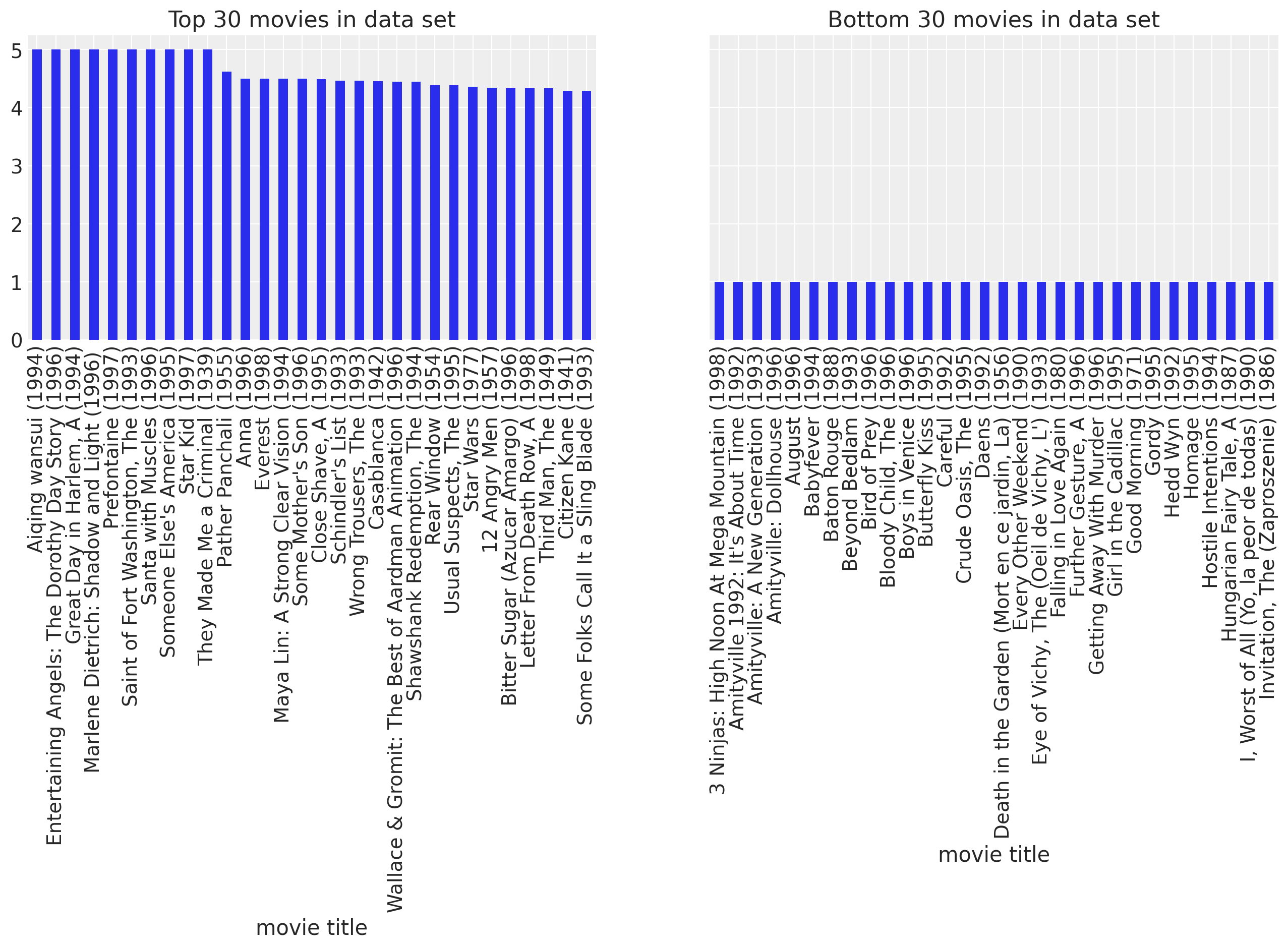

fig, (ax1, ax2) = plt.subplots(ncols=2, figsize=(16, 4), sharey=True)

movie_means.nlargest(30).plot(kind="bar", ax=ax1, title="Top 30 movies in data set")

movie_means.nsmallest(30).plot(kind="bar", ax=ax2, title="Bottom 30 movies in data set");

Makes sense to me. We now know there are definite popularity differences between the movies. Some of them are simply better than others, and some are downright lousy. Looking at the movie means allowed us to discover these general trends. Perhaps there are similar trends across users. It might be the case that some users are simply more easily entertained than others. Let’s take a look.

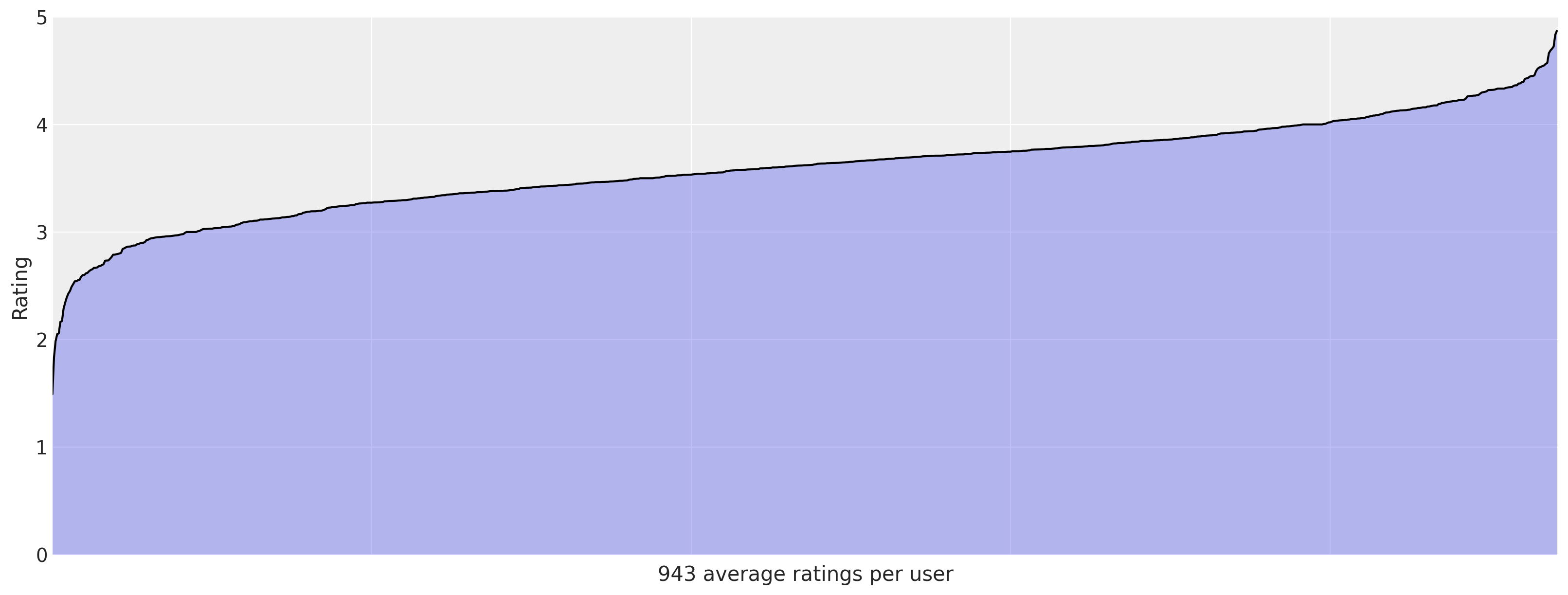

user_means = data.groupby("userid").rating.mean().sort_values()

_, ax = plt.subplots(figsize=(16, 6))

ax.plot(np.arange(len(user_means)), user_means.values, "k-")

ax.fill_between(np.arange(len(user_means)), user_means.values, alpha=0.3)

ax.set_xticklabels("")

# 1000 labels is nonsensical

ax.set_ylabel("Rating")

ax.set_xlabel(f"{len(user_means)} average ratings per user")

ax.set_ylim(0, 5)

ax.set_xlim(0, len(user_means));

We see even more significant trends here. Some users rate nearly everything highly, and some (though not as many) rate nearly everything negatively. These observations will come in handy when considering models to use for predicting user preferences on unseen movies.

Methods¶

Having explored the data, we’re now ready to dig in and start addressing the problem. We want to predict how much each user is going to like all of the movies he or she has not yet rated.

Baselines¶

Every good analysis needs some kind of baseline methods to compare against. It’s difficult to claim we’ve produced good results if we have no reference point for what defines “good”. We’ll define three very simple baseline methods and find the RMSE using these methods. Our goal will be to obtain lower RMSE scores with whatever model we produce.

Uniform Random Baseline¶

Our first baseline is about as dead stupid as you can get. Every place we see a missing value in \(R\), we’ll simply fill it with a number drawn uniformly at random in the range [1, 5]. We expect this method to do the worst by far.

Global Mean Baseline¶

This method is only slightly better than the last. Wherever we have a missing value, we’ll fill it in with the mean of all observed ratings.

Mean of Means Baseline¶

Now we’re going to start getting a bit smarter. We imagine some users might be easily amused, and inclined to rate all movies more highly. Other users might be the opposite. Additionally, some movies might simply be more witty than others, so all users might rate some movies more highly than others in general. We can clearly see this in our graph of the movie means above. We’ll attempt to capture these general trends through per-user and per-movie rating means. We’ll also incorporate the global mean to smooth things out a bit. So if we see a missing value in cell \(R_{ij}\), we’ll average the global mean with user \(i\)’s mean rating (the mean of row \(i\)) and item \(j\)’s mean rating (the mean of column \(j\)), and use that value to fill it in.
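In symbols, the fill-in value is \(R_{ij}^* = \tfrac{1}{3}(\bar{R} + \bar{R}_{i\cdot} + \bar{R}_{\cdot j})\), where \(\bar{R}\) is the global mean, \(\bar{R}_{i\cdot}\) is user \(i\)’s mean and \(\bar{R}_{\cdot j}\) is item \(j\)’s mean over observed ratings. The implementation in this notebook uses a Python double loop; as a hedged alternative, here is a vectorized NumPy sketch (the function name is hypothetical) that likewise averages only the global and user means for items with no training ratings.

```python
import warnings

import numpy as np


def mean_of_means(train):
    """Fill every cell with the mean of the global, user, and item means (NaN = missing)."""
    global_mean = np.nanmean(train)
    with warnings.catch_warnings():
        # An item with no training ratings gives an all-NaN column; silence the
        # "Mean of empty slice" warning and let that item's mean stay NaN.
        warnings.simplefilter("ignore", category=RuntimeWarning)
        user_means = np.nanmean(train, axis=1)  # shape (N,)
        item_means = np.nanmean(train, axis=0)  # shape (M,)
    n, m = train.shape
    candidates = np.stack([
        np.full((n, m), global_mean),
        np.broadcast_to(user_means[:, None], (n, m)),
        np.broadcast_to(item_means[None, :], (n, m)),
    ])
    # nanmean over the three candidates skips a missing item mean, so unseen
    # items fall back to the average of the global and user means.
    return np.nanmean(candidates, axis=0)
```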

```python
# Create a base class with scaffolding for our 3 baselines.


def split_title(title):
    """Change "BaselineMethod" to "Baseline Method"."""
    words = []
    tmp = [title[0]]
    for c in title[1:]:
        if c.isupper():
            words.append("".join(tmp))
            tmp = [c]
        else:
            tmp.append(c)
    words.append("".join(tmp))
    return " ".join(words)


class Baseline:
    """Calculate baseline predictions."""

    def __init__(self, train_data):
        """Simple heuristic-based transductive learning to fill in missing
        values in data matrix."""
        self.predict(train_data.copy())

    def predict(self, train_data):
        raise NotImplementedError("baseline prediction not implemented for base class")

    def rmse(self, test_data):
        """Calculate root mean squared error for predictions on test data."""
        return rmse(test_data, self.predicted)

    def __str__(self):
        return split_title(self.__class__.__name__)


# Implement the 3 baselines.


class UniformRandomBaseline(Baseline):
    """Fill missing values with uniform random values."""

    def predict(self, train_data):
        nan_mask = np.isnan(train_data)
        masked_train = np.ma.masked_array(train_data, nan_mask)
        pmin, pmax = masked_train.min(), masked_train.max()
        N = nan_mask.sum()
        train_data[nan_mask] = rng.uniform(pmin, pmax, N)
        self.predicted = train_data


class GlobalMeanBaseline(Baseline):
    """Fill in missing values using the global mean."""

    def predict(self, train_data):
        nan_mask = np.isnan(train_data)
        train_data[nan_mask] = train_data[~nan_mask].mean()
        self.predicted = train_data


class MeanOfMeansBaseline(Baseline):
    """Fill in missing values using mean of user/item/global means."""

    def predict(self, train_data):
        nan_mask = np.isnan(train_data)
        masked_train = np.ma.masked_array(train_data, nan_mask)
        global_mean = masked_train.mean()
        user_means = masked_train.mean(axis=1)
        item_means = masked_train.mean(axis=0)
        self.predicted = train_data.copy()
        n, m = train_data.shape
        for i in range(n):
            for j in range(m):
                # An item with no training ratings has a masked mean; leave it out.
                if np.ma.isMA(item_means[j]):
                    self.predicted[i, j] = np.mean((global_mean, user_means[i]))
                else:
                    self.predicted[i, j] = np.mean((global_mean, user_means[i], item_means[j]))


baseline_methods = {}
baseline_methods["ur"] = UniformRandomBaseline
baseline_methods["gm"] = GlobalMeanBaseline
baseline_methods["mom"] = MeanOfMeansBaseline
```

```python
num_users = data.userid.unique().shape[0]
num_items = data.itemid.unique().shape[0]
sparsity = 1 - len(data) / (num_users * num_items)
print(f"Users: {num_users}\nMovies: {num_items}\nSparsity: {sparsity}")

dense_data = data.pivot(index="userid", columns="itemid", values="rating").values
```

Users: 943

Movies: 1682

Sparsity: 0.9369533063577546

Probabilistic Matrix Factorization¶

Probabilistic Matrix Factorization [Mnih and Salakhutdinov, 2008] is a probabilistic approach to the collaborative filtering problem that takes a Bayesian perspective. The ratings \(R\) are modeled as draws from a Gaussian distribution. The mean for \(R_{ij}\) is \(U_i V_j^T\). The precision \(\alpha\) is a fixed parameter that reflects the uncertainty of the estimations; the normal distribution is commonly reparameterized in terms of precision, which is the inverse of the variance. Complexity is controlled by placing zero-mean spherical Gaussian priors on \(U\) and \(V\). In other words, each row of \(U\) is drawn from a multivariate Gaussian with mean \(\mu = 0\) and precision which is some multiple of the identity matrix \(I\). Those multiples are \(\alpha_U\) for \(U\) and \(\alpha_V\) for \(V\). So our model is defined by:

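\[
P(R \mid U, V, \alpha) = \prod_{i=1}^N \prod_{j=1}^M \left[ \mathcal{N}\left(R_{ij} \mid U_i V_j^T, \alpha^{-1}\right) \right]^{I_{ij}}
\]

\[
P(U \mid \alpha_U) = \prod_{i=1}^N \mathcal{N}\left(U_i \mid 0, \alpha_U^{-1} \mathbf{I}\right)
\]

\[
P(V \mid \alpha_V) = \prod_{j=1}^M \mathcal{N}\left(V_j \mid 0, \alpha_V^{-1} \mathbf{I}\right)
\]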
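To make the generative story concrete, here is a small NumPy simulation of it: draw \(U\) and \(V\) from their spherical Gaussian priors, draw every \(R_{ij}\) from the Gaussian likelihood with variance \(1/\alpha\), then hide most cells with an indicator matrix. The sizes, precisions, and observation rate are made-up assumptions for illustration, and a separate generator (toy_rng) is used so the notebook’s rng is left untouched.

```python
import numpy as np

toy_rng = np.random.default_rng(0)

# Hypothetical toy sizes and precisions.
n_users, n_items, dim = 6, 8, 2
alpha, alpha_u, alpha_v = 2.0, 1.0, 1.0

# Spherical priors: precision alpha_u * I means independent entries with std 1/sqrt(alpha_u).
U = toy_rng.normal(0.0, np.sqrt(1.0 / alpha_u), size=(n_users, dim))
V = toy_rng.normal(0.0, np.sqrt(1.0 / alpha_v), size=(n_items, dim))

# Likelihood: R_ij ~ N(U_i V_j^T, 1 / alpha).
R_full = toy_rng.normal(U @ V.T, np.sqrt(1.0 / alpha))

# Indicator matrix: keep roughly 30% of cells as "observed", the rest become NaN.
observed = toy_rng.random((n_users, n_items)) < 0.3
R = np.where(observed, R_full, np.nan)
```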

Through their precision parameters, the priors on \(U\) and \(V\) keep our latent variables from growing too far from 0; the larger \(\alpha_U\) and \(\alpha_V\) are, the stronger this pull toward zero. This prevents overly strong user preferences and item factor compositions from being learned. This is commonly known as complexity control, where the complexity of the model here is measured by the magnitude of the latent variables. Controlling complexity like this helps prevent overfitting, which allows the model to generalize better for unseen data. We must also choose an appropriate \(\alpha\) value for the normal distribution for \(R\). So the challenge becomes choosing appropriate values for \(\alpha_U\), \(\alpha_V\), and \(\alpha\). This challenge can be tackled with the soft weight-sharing methods discussed by Nowlan and Hinton [1992]. However, for the purposes of this analysis, we will stick to using point estimates obtained from our data.

```python
import logging
import time

import scipy as sp
import theano

# Enable on-the-fly graph computations, but ignore
# absence of intermediate test values.
theano.config.compute_test_value = "ignore"

# Set up logging.
logger = logging.getLogger()
logger.setLevel(logging.INFO)


class PMF:
    """Probabilistic Matrix Factorization model using pymc3."""

    def __init__(self, train, dim, alpha=2, std=0.01, bounds=(1, 5)):
        """Build the Probabilistic Matrix Factorization model using pymc3.

        :param np.ndarray train: The training data to use for learning the model.
        :param int dim: Dimensionality of the model; number of latent factors.
        :param int alpha: Fixed precision for the likelihood function.
        :param float std: Amount of noise to use for model initialization.
        :param (tuple of int) bounds: (lower, upper) bound of ratings.
            These bounds will simply be used to cap the estimates produced for R.
        """
        self.dim = dim
        self.alpha = alpha
        # Likelihood noise standard deviation implied by the precision alpha.
        self.std = np.sqrt(1.0 / alpha)
        self.bounds = bounds
        self.data = train.copy()
        n, m = self.data.shape

        # Perform mean value imputation
        nan_mask = np.isnan(self.data)
        self.data[nan_mask] = self.data[~nan_mask].mean()

        # Low precision reflects uncertainty; prevents overfitting.
        # Set each prior precision to the inverse of the mean variance
        # across users (rows) or items (columns).
        self.alpha_u = 1 / self.data.var(axis=1).mean()
        self.alpha_v = 1 / self.data.var(axis=0).mean()

        # Specify the model.
        logging.info("building the PMF model")
        with pm.Model(
            coords={
                "users": np.arange(n),
                "movies": np.arange(m),
                "latent_factors": np.arange(dim),
                "obs_id": np.arange(self.data[~nan_mask].shape[0]),
            }
        ) as pmf:
            U = pm.MvNormal(
                "U",
                mu=0,
                tau=self.alpha_u * np.eye(dim),
                dims=("users", "latent_factors"),
                testval=rng.standard_normal(size=(n, dim)) * std,
            )
            V = pm.MvNormal(
                "V",
                mu=0,
                tau=self.alpha_v * np.eye(dim),
                dims=("movies", "latent_factors"),
                testval=rng.standard_normal(size=(m, dim)) * std,
            )
            # Likelihood over the originally observed cells only.
            R = pm.Normal(
                "R",
                mu=(U @ V.T)[~nan_mask],
                tau=self.alpha,
                dims="obs_id",
                observed=self.data[~nan_mask],
            )
        logging.info("done building the PMF model")
        self.model = pmf

    def __str__(self):
        return self.__class__.__name__
```

We’ll also need functions for calculating the MAP and performing sampling on our PMF model. When the observation noise precision \(\alpha\) and the prior precisions \(\alpha_U\) and \(\alpha_V\) are all kept fixed, maximizing the log posterior is equivalent to minimizing the sum-of-squared-errors objective function with quadratic regularization terms:

\[
E = \frac{1}{2} \sum_{i=1}^N \sum_{j=1}^M I_{ij} \left(R_{ij} - U_i V_j^T\right)^2 + \frac{\lambda_U}{2} \|U\|_{Fro}^2 + \frac{\lambda_V}{2} \|V\|_{Fro}^2
\]

where \(\lambda_U = \alpha_U / \alpha\), \(\lambda_V = \alpha_V / \alpha\), and \(\|\cdot\|_{Fro}^2\) denotes the squared Frobenius norm [Mnih and Salakhutdinov, 2008]. Minimizing this objective function gives a local minimum, which is a (local) maximum a posteriori (MAP) estimate. While it is possible to use a fast Stochastic Gradient Descent procedure to find this MAP, we’ll be finding it using the utilities built into pymc3. In particular, we’ll use find_MAP with L-BFGS-B optimization, which pymc3 delegates to scipy.optimize.minimize. Having found this MAP estimate, we can use it as our starting point for MCMC sampling.
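The equivalence between the log posterior and the regularized squared-error objective described above can be checked numerically. The sketch below (function names hypothetical, random toy inputs) drops the Gaussians’ normalizing constants, which do not depend on \(U\) or \(V\), and confirms that the unnormalized log posterior equals \(-\alpha\) times the objective when \(\lambda_U = \alpha_U/\alpha\) and \(\lambda_V = \alpha_V/\alpha\).

```python
import numpy as np


def pmf_objective(R, U, V, lam_u, lam_v):
    """Regularized sum of squared errors over observed cells (NaN marks missing)."""
    observed = ~np.isnan(R)  # indicator matrix I
    resid = np.where(observed, R - U @ V.T, 0.0)  # I_ij * (R_ij - U_i V_j^T)
    sse = 0.5 * np.sum(resid**2)
    return sse + 0.5 * lam_u * np.sum(U**2) + 0.5 * lam_v * np.sum(V**2)


def log_posterior_unnormalized(R, U, V, alpha, alpha_u, alpha_v):
    """log P(U, V | R) up to an additive constant, for fixed precisions."""
    observed = ~np.isnan(R)
    resid = np.where(observed, R - U @ V.T, 0.0)
    log_lik = -0.5 * alpha * np.sum(resid**2)
    log_prior = -0.5 * alpha_u * np.sum(U**2) - 0.5 * alpha_v * np.sum(V**2)
    return log_lik + log_prior


demo_rng = np.random.default_rng(1)
R = demo_rng.uniform(1, 5, size=(4, 5))
R[demo_rng.random(R.shape) < 0.5] = np.nan  # hide about half the cells
U = demo_rng.normal(size=(4, 3))
V = demo_rng.normal(size=(5, 3))
alpha, alpha_u, alpha_v = 2.0, 0.5, 0.8

E = pmf_objective(R, U, V, alpha_u / alpha, alpha_v / alpha)
logp = log_posterior_unnormalized(R, U, V, alpha, alpha_u, alpha_v)
```

Because the two quantities differ only by the positive factor \(\alpha\), maximizing one and minimizing the other lead to the same optimum.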

Since it is a reasonably complex model, we expect the MAP estimation to take some time, so let’s cache the result on the object once we’ve found it. Note that the function for finding the MAP below is written as a method: it expects a self argument that carries the model. We then attach that function to the PMF class, whose instances provide that state after initialization. The PMF class is defined in pieces this way so I can say a few things between each piece to make it clearer.

```python
def _find_map(self):
    """Find mode of posterior using L-BFGS-B optimization."""
    tstart = time.time()
    with self.model:
        logging.info("finding PMF MAP using L-BFGS-B optimization...")
        self._map = pm.find_MAP(method="L-BFGS-B")
    elapsed = int(time.time() - tstart)
    logging.info("found PMF MAP in %d seconds", elapsed)
    return self._map


def _get_map(self):
    """Return the cached MAP estimate, computing it on first access."""
    try:
        return self._map
    except AttributeError:
        return self.find_map()


# Update our class with the new MAP infrastructure.
PMF.find_map = _find_map
PMF.map = property(_get_map)
```

So now our PMF class has a map property which will either be found using L-BFGS-B optimization or returned from the cached result of a previous optimization. Once we have the MAP, we can use it as a starting point for our MCMC sampler. We’ll need a sampling function in order to draw MCMC samples to approximate the posterior distribution of the PMF model.
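The try/except property is a hand-rolled form of lazy caching. On Python 3.8 and later, functools.cached_property gives the same behavior; the toy class below is a hypothetical stand-in (not the PMF class) that shows the pattern, with a call counter in place of the expensive optimization.

```python
import functools


class LazyMAPDemo:
    """Hypothetical stand-in for PMF that caches an expensive result."""

    def __init__(self):
        self.calls = 0

    def find_map(self):
        # Stand-in for pm.find_MAP: count how often the "optimization" runs.
        self.calls += 1
        return {"U": [0.0], "V": [0.0]}

    @functools.cached_property
    def map(self):
        # Runs find_map on first access, then stores the result on the instance.
        return self.find_map()


demo = LazyMAPDemo()
first = demo.map
second = demo.map
```

One difference from the hand-rolled version: here find_map does not update the cached value, so to force a recomputation you would delete the cached attribute first (del demo.map).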

```python
# Draw MCMC samples.
def _draw_samples(self, **kwargs):
    kwargs.setdefault("chains", 1)
    with self.model:
        self.trace = pm.sample(**kwargs, return_inferencedata=True)


# Update our class with the sampling infrastructure.
PMF.draw_samples = _draw_samples
```

We could define some kind of default trace property like we did for the MAP, but that would mean using possibly nonsensical default values for the number of samples and cores. Better to leave it as a non-optional call to draw_samples. Finally, we’ll need a function to make predictions using our inferred values for \(U\) and \(V\). For user \(i\) and movie \(j\), a prediction is generated by drawing from \(\mathcal{N}(U_i V_j^T, \alpha^{-1})\), that is, a normal distribution with variance \(1/\alpha\). To generate predictions from the sampler, we generate an \(R\) matrix for each of the \(K\) sampled pairs of \(U\) and \(V\), then we combine these by averaging over the \(K\) samples.
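The averaging step can be written compactly with NumPy. This is a sketch under assumptions: the \(K\) draws of \(U\) and \(V\) are stacked along a leading axis, the function name is hypothetical, and, unlike the noisy draws described above, it averages the noise-free means \(U_k V_k^T\). Because the likelihood noise has mean zero, both approaches estimate the same posterior predictive mean apart from the effect of clipping, and skipping the noise reduces Monte Carlo variance.

```python
import numpy as np


def posterior_mean_ratings(U_samples, V_samples, bounds=(1, 5)):
    """Average predicted rating matrices over K posterior draws.

    U_samples has shape (K, N, D); V_samples has shape (K, M, D).
    Returns an (N, M) matrix.
    """
    # One N x M matrix U_k V_k^T per draw, stacked into shape (K, N, M).
    R_samples = np.einsum("knd,kmd->knm", U_samples, V_samples)
    # Clip each draw to the rating scale, then average over the K draws.
    low, high = bounds
    return np.clip(R_samples, low, high).mean(axis=0)
```

With the InferenceData returned by draw_samples, the stacked draws could be obtained with something like pmf.trace.posterior["U"].values.reshape(-1, n_users, DIM), since posterior arrays are laid out as (chain, draw, ...). For the full MovieLens matrix each draw has about 1.6 million cells, so in practice one would accumulate the mean draw by draw rather than materializing the whole (K, N, M) array.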

We’ll want to inspect the individual \(R\) matrices before averaging them for diagnostic purposes. So we’ll write code for the averaging piece during evaluation. The function below simply draws an \(R\) matrix given a \(U\) and \(V\) and the fixed \(\alpha\) stored in the PMF object.

```python
def _predict(self, U, V):
    """Estimate R from the given values of U and V."""
    R = np.dot(U, V.T)
    # Add likelihood noise with standard deviation sqrt(1 / alpha).
    sample_R = rng.normal(R, self.std)
    # Bound ratings to the valid range.
    low, high = self.bounds
    return np.clip(sample_R, low, high)


PMF.predict = _predict
```

One final thing to note: the dot products in this model are often constrained using a logistic function \(g(x) = 1/(1 + \exp(-x))\), which bounds the predictions to the range [0, 1]. To facilitate this bounding, the ratings are also mapped to the range [0, 1] using \(t(x) = (x - \min) / \text{range}\), where range is the difference between the maximum and minimum ratings. The authors of PMF also introduced a constrained version which performs better on users with fewer ratings [Salakhutdinov and Mnih, 2008]. Both models are generally improvements upon the basic model presented here. However, in the interest of time and space, these will not be implemented here.
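Although the constrained variant is not implemented in this notebook, the two mappings are easy to sketch. The helper names are hypothetical and the bounds default to the 1 to 5 MovieLens scale: ratings go to [0, 1] with t, the dot product is squashed with g, and the result is mapped back to the rating scale by inverting t. The example vectors reuse the illustrative values from the Formalization section; in the constrained model the learned values would differ.

```python
import numpy as np


def to_unit(r, low=1.0, high=5.0):
    """Map ratings in [low, high] to [0, 1]: t(x) = (x - low) / (high - low)."""
    return (np.asarray(r, dtype=float) - low) / (high - low)


def from_unit(t, low=1.0, high=5.0):
    """Invert to_unit, mapping values in [0, 1] back to the rating scale."""
    return low + np.asarray(t, dtype=float) * (high - low)


def logistic(x):
    """g(x) = 1 / (1 + exp(-x)), squashing any real number into (0, 1)."""
    return 1.0 / (1.0 + np.exp(-np.asarray(x, dtype=float)))


# A constrained prediction for one user/item pair: squash the dot product, then rescale.
U_i = np.array([0.5, 0.1, 1.5, 1.1, 0.3])
V_j = np.array([0.5, 1.5, 1.25, 0.8, 0.9])
pred = from_unit(logistic(U_i @ V_j))
```

In exact arithmetic g never reaches 0 or 1, so predictions of this form fall strictly inside the rating range without explicit clipping.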

Evaluation¶

Metrics¶

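In order to understand how effective our models are, we’ll need to be able to evaluate them. We’ll be evaluating in terms of root mean squared error (RMSE), which looks like this:

\[
\mathrm{RMSE} = \sqrt{\frac{\sum_{i=1}^N \sum_{j=1}^M I_{ij} \left(R_{ij} - R_{ij}^*\right)^2}{\sum_{i=1}^N \sum_{j=1}^M I_{ij}}}
\]

where \(I_{ij} = 1\) if \(R_{ij}\) is present in the test set and 0 otherwise, and \(R_{ij}^*\) is the predicted rating. In this case, the RMSE can be thought of as the typical size of a prediction error, measured in rating points; it equals the standard deviation of the errors when those errors average to zero.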

```python
# Define our evaluation function.
def rmse(test_data, predicted):
    """Calculate root mean squared error.

    Ignoring missing values in the test data.
    """
    I = ~np.isnan(test_data)  # indicator for non-missing values
    N = I.sum()  # number of non-missing values
    sqerror = (test_data - predicted) ** 2  # squared error array
    mse = sqerror[I].sum() / N  # mean squared error
    return np.sqrt(mse)  # RMSE
```

Training Data vs. Test Data¶

The next thing we need to do is split our data into a training set and a test set. Matrix factorization techniques use transductive learning rather than inductive learning. So we produce a test set by taking a random sample of the observed cells in the full \(N \times M\) data matrix. The values selected as test samples are replaced with nan values in a copy of the original data matrix to produce the training set. Since we’ll be producing random splits, we’d like to be able to replicate our results: the test sample is drawn from the seeded generator rng, so running the notebook top to bottom reproduces the same split. To identify which split is which across runs, one could also take a hash of the indices selected for testing and use it to name saved copies of the train/test sets; the split function itself only returns the two arrays.

```python
# Define a function for splitting train/test data.
def split_train_test(data, percent_test=0.1):
    """Split the data into train/test sets.

    :param float percent_test: Fraction of observed ratings to use for testing. Default 0.1.
    """
    n, m = data.shape  # number of users, number of movies
    N = n * m  # number of cells in matrix

    # Prepare train/test ndarrays.
    train = data.copy()
    test = np.full(data.shape, np.nan)

    # Draw random sample of training data to use for testing.
    tosample = np.where(~np.isnan(train))  # ignore nan values in data
    idx_pairs = list(zip(tosample[0], tosample[1]))  # tuples of row/col index pairs
    test_size = int(len(idx_pairs) * percent_test)  # use percent_test of the data as test set
    train_size = len(idx_pairs) - test_size  # and remainder for training

    indices = np.arange(len(idx_pairs))  # indices of index pairs
    sample = rng.choice(indices, replace=False, size=test_size)

    # Transfer random sample from train set to test set.
    for idx in sample:
        idx_pair = idx_pairs[idx]
        test[idx_pair] = train[idx_pair]  # transfer to test set
        train[idx_pair] = np.nan  # remove from train set

    # Verify everything worked properly
    assert train_size == N - np.isnan(train).sum()
    assert test_size == N - np.isnan(test).sum()

    # Return train set and test set
    return train, test


train, test = split_train_test(dense_data)
```
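The hashing idea mentioned above is not implemented by split_train_test. A minimal sketch of it, assuming a SHA-1 digest of the test-cell positions as the identifier and NumPy’s .npz format for storage (both arbitrary choices; the function names and file-naming scheme are hypothetical):

```python
import hashlib

import numpy as np


def split_id(test):
    """Short, stable identifier for a split: SHA-1 of the (row, col) positions in the test set."""
    rows, cols = np.where(~np.isnan(test))  # returned in row-major order, so stable
    idx = np.stack([rows, cols], axis=1).astype(np.int64)
    return hashlib.sha1(idx.tobytes()).hexdigest()[:10]


def save_split(train, test, prefix="split"):
    """Write train/test to an .npz file named after the split's hash."""
    path = f"{prefix}-{split_id(test)}.npz"
    np.savez(path, train=train, test=test)
    return path
```

For example, save_split(train, test) would write a file named like split-<hash>.npz to the working directory, and np.load on that path restores both arrays.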

Results¶

```python
# Let's see the results:
baselines = {}
for name in baseline_methods:
    Method = baseline_methods[name]
    method = Method(train)
    baselines[name] = method.rmse(test)
    print("{} RMSE:\t{:.5f}".format(method, baselines[name]))
```

Uniform Random Baseline RMSE: 1.68490

Global Mean Baseline RMSE: 1.11492

Mean Of Means Baseline RMSE: 1.00750

As expected: the uniform random baseline is the worst by far, the global mean baseline is next best, and the mean of means method is our best baseline. Now let’s see how PMF stacks up.

```python
# We use a fixed precision for the likelihood.
# This reflects uncertainty in the dot product.
# We choose 2, following in the footsteps of Salakhutdinov and Mnih.
ALPHA = 2

# The dimensionality D; the number of latent factors.
# We can adjust this higher to try to capture more subtle
# characteristics of each movie. However, the higher it is,
# the more expensive our inference procedures will be.
# Specifically, we have D(N + M) latent variables. For our
# Movielens dataset, this means we have D(2625), so for 5
# dimensions we would sample 13125 latent variables, and for
# the 10 dimensions used here, 26250.
DIM = 10

pmf = PMF(train, DIM, ALPHA, std=0.05)
```

INFO:root:building the PMF model

INFO:root:done building the PMF model
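As a quick check on the latent-variable count in the comment above, the number of entries in U and V is D(N + M). The sketch below assumes the MovieLens 100k sizes (943 users and 1682 movies, which sum to the 2625 quoted above). `n_latent` is a hypothetical helper for illustration, not part of the notebook's `PMF` class.

```python
def n_latent(n_users: int, n_movies: int, dim: int) -> int:
    """Count the entries of U (n_users x dim) plus V (n_movies x dim)."""
    return dim * (n_users + n_movies)


# Assumed MovieLens 100k sizes; N + M = 2625.
N_USERS, N_MOVIES = 943, 1682

for d in (5, 10):
    # Prints "5 13125", then "10 26250".
    print(d, n_latent(N_USERS, N_MOVIES, d))
```

Because the count grows linearly in both D and N + M, doubling the latent dimension doubles the number of variables the sampler must explore.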

Predictions Using MAP
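For intuition about what `pmf.find_map()` optimizes, here is a minimal sketch, not the notebook's implementation. It assumes the standard PMF formulation: a Gaussian likelihood on observed ratings with precision `alpha`, and zero-mean isotropic Gaussian priors on U and V with precisions `alpha_u` and `alpha_v`. Under those assumptions the MAP estimate minimizes the squared error on observed ratings plus L2 penalties on U and V. The rating matrix is a tiny synthetic one, the precision values are placeholders rather than the notebook's `ALPHA` and `std` settings, and names such as `neg_log_post` and `mask` are hypothetical. The optimizer is SciPy's `minimize(method="L-BFGS-B")`, matching the method named in the log output.

```python
import numpy as np
from scipy.optimize import minimize

sketch_rng = np.random.default_rng(0)

# Tiny synthetic problem (hypothetical sizes, not MovieLens).
n_users, n_movies, dim = 6, 5, 2
ratings = sketch_rng.normal(size=(n_users, dim)) @ sketch_rng.normal(size=(n_movies, dim)).T
ratings += 0.1 * sketch_rng.normal(size=ratings.shape)
mask = sketch_rng.random(ratings.shape) < 0.7  # True where a rating is observed

# Placeholder precisions: likelihood (alpha) and priors on U, V (assumed values).
alpha, alpha_u, alpha_v = 2.0, 0.5, 0.5


def neg_log_post(theta):
    """Negative log posterior of PMF (up to a constant) and its gradient."""
    U = theta[: n_users * dim].reshape(n_users, dim)
    V = theta[n_users * dim :].reshape(n_movies, dim)
    err = np.where(mask, U @ V.T - ratings, 0.0)  # ignore unobserved cells
    loss = 0.5 * (alpha * np.sum(err**2) + alpha_u * np.sum(U**2) + alpha_v * np.sum(V**2))
    grad_U = alpha * err @ V + alpha_u * U
    grad_V = alpha * err.T @ U + alpha_v * V
    return loss, np.concatenate([grad_U.ravel(), grad_V.ravel()])


# Small random start, then L-BFGS-B using the analytic gradient (jac=True).
theta0 = 0.1 * sketch_rng.normal(size=(n_users + n_movies) * dim)
res = minimize(neg_log_post, theta0, jac=True, method="L-BFGS-B")
U_map = res.x[: n_users * dim].reshape(n_users, dim)
V_map = res.x[n_users * dim :].reshape(n_movies, dim)
R_hat = U_map @ V_map.T  # MAP point predictions for every (user, movie) pair
print(res.success, R_hat.shape)
```

Because MAP returns a single point estimate, `R_hat` carries no uncertainty about the predicted ratings. Recovering that uncertainty is what the MCMC step of the notebook is for.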

# Find MAP for PMF.

pmf.find_map();

INFO:root:finding PMF MAP using L-BFGS-B optimization...

WARNING (theano.tensor.blas): We did not find a dynamic library in the library_dir of the library we use for blas. If you use ATLAS, make sure to compile it with dynamics library.


INFO:filelock:Lock 140068056483392 released on /home/ada/.theano/compiledir_Linux-5.4--generic-x86_64-with-glibc2.10-x86_64-3.8.10-64/.lock

INFO:filelock:Lock 140068056186592 acquired on /home/ada/.theano/compiledir_Linux-5.4--generic-x86_64-with-glibc2.10-x86_64-3.8.10-64/.lock

INFO:filelock:Lock 140068056186592 released on /home/ada/.theano/compiledir_Linux-5.4--generic-x86_64-with-glibc2.10-x86_64-3.8.10-64/.lock

INFO:filelock:Lock 140068056723664 acquired on /home/ada/.theano/compiledir_Linux-5.4--generic-x86_64-with-glibc2.10-x86_64-3.8.10-64/.lock

INFO:filelock:Lock 140068056723664 released on /home/ada/.theano/compiledir_Linux-5.4--generic-x86_64-with-glibc2.10-x86_64-3.8.10-64/.lock

INFO:filelock:Lock 140068056209488 acquired on /home/ada/.theano/compiledir_Linux-5.4--generic-x86_64-with-glibc2.10-x86_64-3.8.10-64/.lock

INFO:filelock:Lock 140068056209488 released on /home/ada/.theano/compiledir_Linux-5.4--generic-x86_64-with-glibc2.10-x86_64-3.8.10-64/.lock

INFO:filelock:Lock 140068056209104 acquired on /home/ada/.theano/compiledir_Linux-5.4--generic-x86_64-with-glibc2.10-x86_64-3.8.10-64/.lock

INFO:filelock:Lock 140068056209104 released on /home/ada/.theano/compiledir_Linux-5.4--generic-x86_64-with-glibc2.10-x86_64-3.8.10-64/.lock

INFO:filelock:Lock 140068054230208 acquired on /home/ada/.theano/compiledir_Linux-5.4--generic-x86_64-with-glibc2.10-x86_64-3.8.10-64/.lock

INFO:filelock:Lock 140068054230208 released on /home/ada/.theano/compiledir_Linux-5.4--generic-x86_64-with-glibc2.10-x86_64-3.8.10-64/.lock

INFO:root:found PMF MAP in 84 seconds

Excellent. The first thing we want to do is make sure the MAP estimate we obtained is reasonable. We can check this by computing the RMSE of the ratings predicted from the MAP values of \(U\) and \(V\). First we define a function for generating the predicted ratings \(R\) from \(U\) and \(V\). We enforce the actual rating bounds by setting all values below 1 to 1 and all values above 5 to 5. Finally, we compute RMSE for both the training set and the test set. We expect the test RMSE to be higher; the difference between the two gives a rough measure of how much we have overfit. Some difference is always expected, but a very low training RMSE paired with a high test RMSE is a strong sign of overfitting.
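The prediction and evaluation steps just described can be sketched in plain NumPy. This is a minimal illustration under stated assumptions, not the notebook's own `predict` or `rmse`: we assume ratings live in a dense array with `np.nan` marking unobserved entries, and the helper names `predict_ratings` and `masked_rmse` (and the toy arrays) are hypothetical. Predictions are \(UV^T\) clipped to the 1 to 5 rating range, and RMSE is computed over observed entries only.

```python
import numpy as np


def predict_ratings(U, V, low=1.0, high=5.0):
    """Hypothetical helper: predicted ratings R = U V^T, clipped to [low, high]."""
    R = U @ V.T  # shape (n_users, n_movies)
    return np.clip(R, low, high)


def masked_rmse(actual, predicted):
    """Hypothetical helper: RMSE over entries where `actual` is not NaN."""
    mask = ~np.isnan(actual)
    diff = actual[mask] - predicted[mask]
    return float(np.sqrt(np.mean(diff ** 2)))


# Toy data (illustrative only): 2 users, 3 movies, 2 latent factors.
# The "toy_" prefix avoids clobbering the notebook's own train/test arrays.
toy_U = np.array([[1.0, 2.0],
                  [0.5, 0.5]])
toy_V = np.array([[1.0, 1.0],
                  [2.0, 2.0],
                  [0.0, 0.5]])
toy_train = np.array([[3.0, np.nan, 2.0],
                      [np.nan, 2.0, np.nan]])
toy_test = np.array([[np.nan, 4.0, np.nan],
                     [2.0, np.nan, 1.0]])

pred = predict_ratings(toy_U, toy_V)
train_rmse = masked_rmse(toy_train, pred)
test_rmse = masked_rmse(toy_test, pred)
print(f"train RMSE: {train_rmse:.5f}, test RMSE: {test_rmse:.5f}, "
      f"difference: {test_rmse - train_rmse:.5f}")
```

In this toy example one raw prediction (6.0) is clipped down to 5 and another (0.25) up to 1, and the test RMSE exceeds the training RMSE, which is the kind of train/test gap `eval_map` reports.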

def eval_map(pmf_model, train, test):
    U = pmf_model.map["U"]
    V = pmf_model.map["V"]

    # Make predictions and calculate RMSE on train & test sets.
    predictions = pmf_model.predict(U, V)
    train_rmse = rmse(train, predictions)
    test_rmse = rmse(test, predictions)
    overfit = test_rmse - train_rmse

    # Print report.
    print("PMF MAP training RMSE: %.5f" % train_rmse)
    print("PMF MAP testing RMSE: %.5f" % test_rmse)
    print("Train/test difference: %.5f" % overfit)

    return test_rmse


# Add eval function to PMF class.
PMF.eval_map = eval_map

# Evaluate PMF MAP estimates.
pmf_map_rmse = pmf.eval_map(train, test)
pmf_improvement = baselines["mom"] - pmf_map_rmse
print("PMF MAP Improvement: %.5f" % pmf_improvement)

PMF MAP training RMSE: 1.01558

PMF MAP testing RMSE: 1.13147

Train/test difference: 0.11589

PMF MAP Improvement: -0.12397

The MAP estimate actually performs worse than the mean-of-means baseline: its test RMSE is 0.12397 higher. We also see a fairly large gap (0.11589) between the train and test RMSE values. This suggests that the point estimates for \(\alpha_U\) and \(\alpha_V\) that we calculated from our data are not doing a great job of controlling model complexity.
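To see why \(\alpha_U\) and \(\alpha_V\) govern model complexity, it may help to write out the quantity that MAP estimation minimizes. Assuming the standard PMF setup, with \(R_{ij} \sim \mathcal{N}(U_i V_j^T, \alpha^{-1})\) on observed entries and zero-mean Gaussian priors with precisions \(\alpha_U\) and \(\alpha_V\) on the rows of \(U\) and \(V\), the negative log posterior is, up to an additive constant, \(\frac{\alpha}{2}\sum_{(i,j)\ \text{observed}} (R_{ij} - U_i V_j^T)^2 + \frac{\alpha_U}{2}\sum_i \lVert U_i \rVert^2 + \frac{\alpha_V}{2}\sum_j \lVert V_j \rVert^2\): a squared-error term plus L2 penalties. The sketch below computes it; the function name `neg_log_posterior` and the toy arrays are hypothetical, and missing ratings are assumed to be `np.nan`.

```python
import numpy as np


def neg_log_posterior(R, U, V, alpha, alpha_U, alpha_V):
    """Hypothetical helper: PMF negative log posterior, up to an additive constant.

    R       : (n, m) ratings, np.nan where unobserved
    U, V    : (n, k) user factors and (m, k) movie factors
    alpha   : precision of the rating noise
    alpha_U : prior precision of each user-factor vector
    alpha_V : prior precision of each movie-factor vector
    """
    observed = ~np.isnan(R)
    residuals = (R - U @ V.T)[observed]          # errors on observed ratings only
    fit = 0.5 * alpha * np.sum(residuals ** 2)   # data-fit (likelihood) term
    penalty_U = 0.5 * alpha_U * np.sum(U ** 2)   # L2 penalty from the prior on U
    penalty_V = 0.5 * alpha_V * np.sum(V ** 2)   # L2 penalty from the prior on V
    return fit + penalty_U + penalty_V


# Toy example: 1 user, 2 movies (one unobserved), 2 latent factors.
toy_R = np.array([[3.0, np.nan]])
toy_U = np.array([[1.0, 0.0]])
toy_V = np.array([[2.0, 1.0],
                  [0.0, 1.0]])

weak_prior = neg_log_posterior(toy_R, toy_U, toy_V, 2.0, 1.0, 1.0)
strong_prior = neg_log_posterior(toy_R, toy_U, toy_V, 2.0, 10.0, 10.0)
print(weak_prior, strong_prior)
```

With the same factors, raising \(\alpha_U\) and \(\alpha_V\) makes large factor values far more costly, so the optimizer shrinks them toward zero (a simpler model). Smaller precisions let the factors grow to fit the training ratings more closely, which can widen the train/test gap seen above.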

Let’s see if we can improve our estimates by approximating our posterior distribution with MCMC sampling. We’ll draw 500 samples after 500 tuning steps.
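Instead of a single point estimate, MCMC gives us many draws of \(U\) and \(V\), and a prediction can average over them. As a minimal sketch, assuming the draws are available as NumPy arrays of shape `(S, n, k)` and `(S, m, k)` (how the notebook's `PMF` class actually stores its trace is not shown here), the posterior-mean rating estimate averages the clipped product \(U_s V_s^T\) over draws \(s\). The function name `posterior_mean_ratings` is hypothetical, and the random draws below are illustrative, not fitted values.

```python
import numpy as np


def posterior_mean_ratings(U_draws, V_draws, low=1.0, high=5.0):
    """Hypothetical helper: average clipped predictions over posterior draws.

    U_draws : (S, n, k) array of user-factor draws
    V_draws : (S, m, k) array of movie-factor draws
    """
    # Batched product: for each draw s, U_draws[s] @ V_draws[s].T -> (S, n, m)
    R_draws = np.einsum("snk,smk->snm", U_draws, V_draws)
    R_draws = np.clip(R_draws, low, high)  # enforce rating bounds per draw
    return R_draws.mean(axis=0)            # posterior mean, shape (n, m)


# Random stand-in draws; a separate generator avoids reusing the notebook's rng.
demo_rng = np.random.default_rng(0)
n_draws, n_users, n_movies, n_factors = 500, 4, 6, 2
toy_U_draws = demo_rng.normal(1.0, 0.3, size=(n_draws, n_users, n_factors))
toy_V_draws = demo_rng.normal(1.0, 0.3, size=(n_draws, n_movies, n_factors))

R_hat = posterior_mean_ratings(toy_U_draws, toy_V_draws)
print(R_hat.shape)
```

Clipping each draw before averaging keeps every per-draw prediction within the rating bounds, so their mean is bounded too; averaging first and clipping afterwards would be an equally reasonable alternative.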

Predictions using MCMC¶

# Draw MCMC samples.

pmf.draw_samples(draws=500, tune=500)

Auto-assigning NUTS sampler...

Initializing NUTS using jitter+adapt_diag...

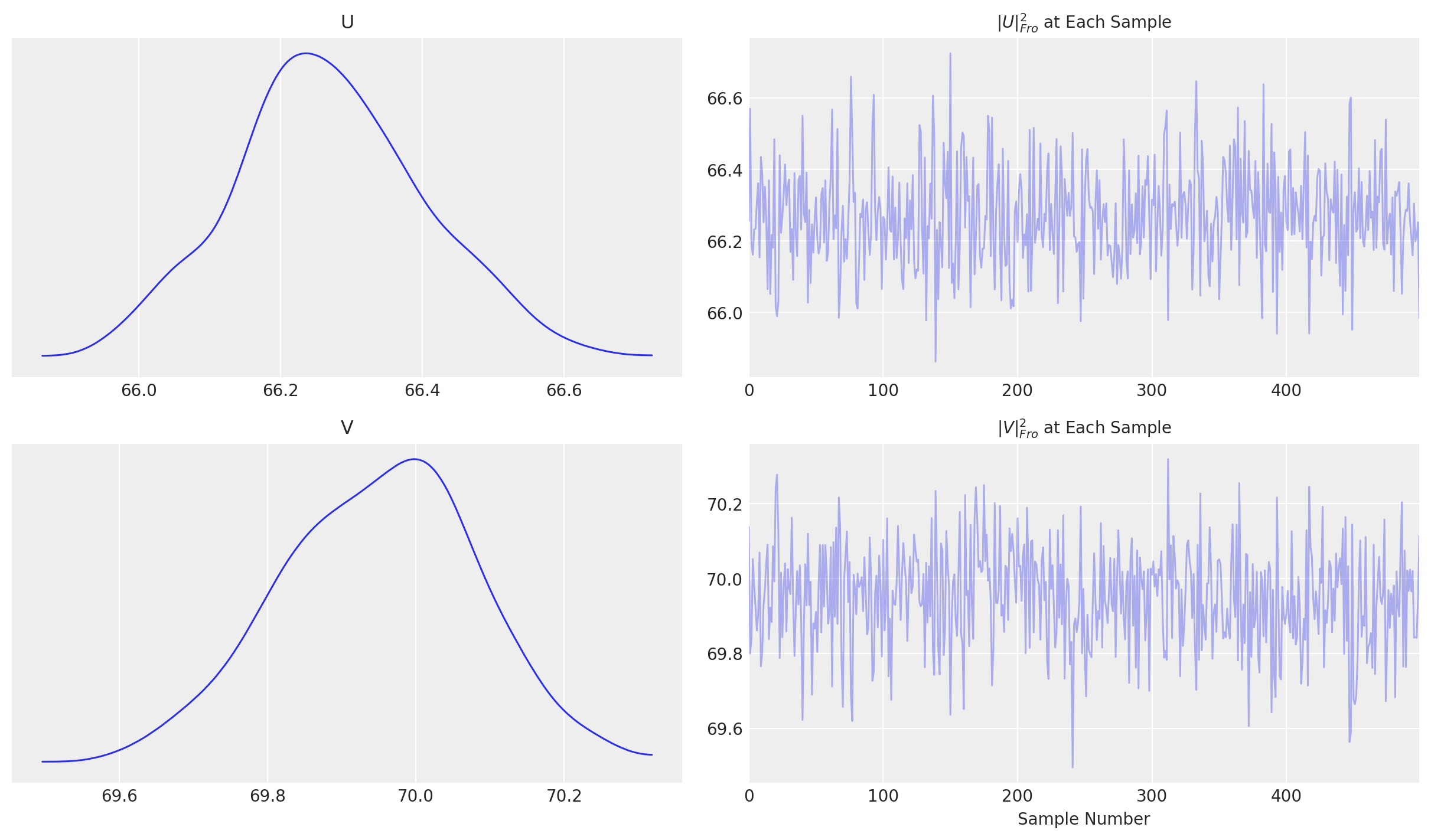

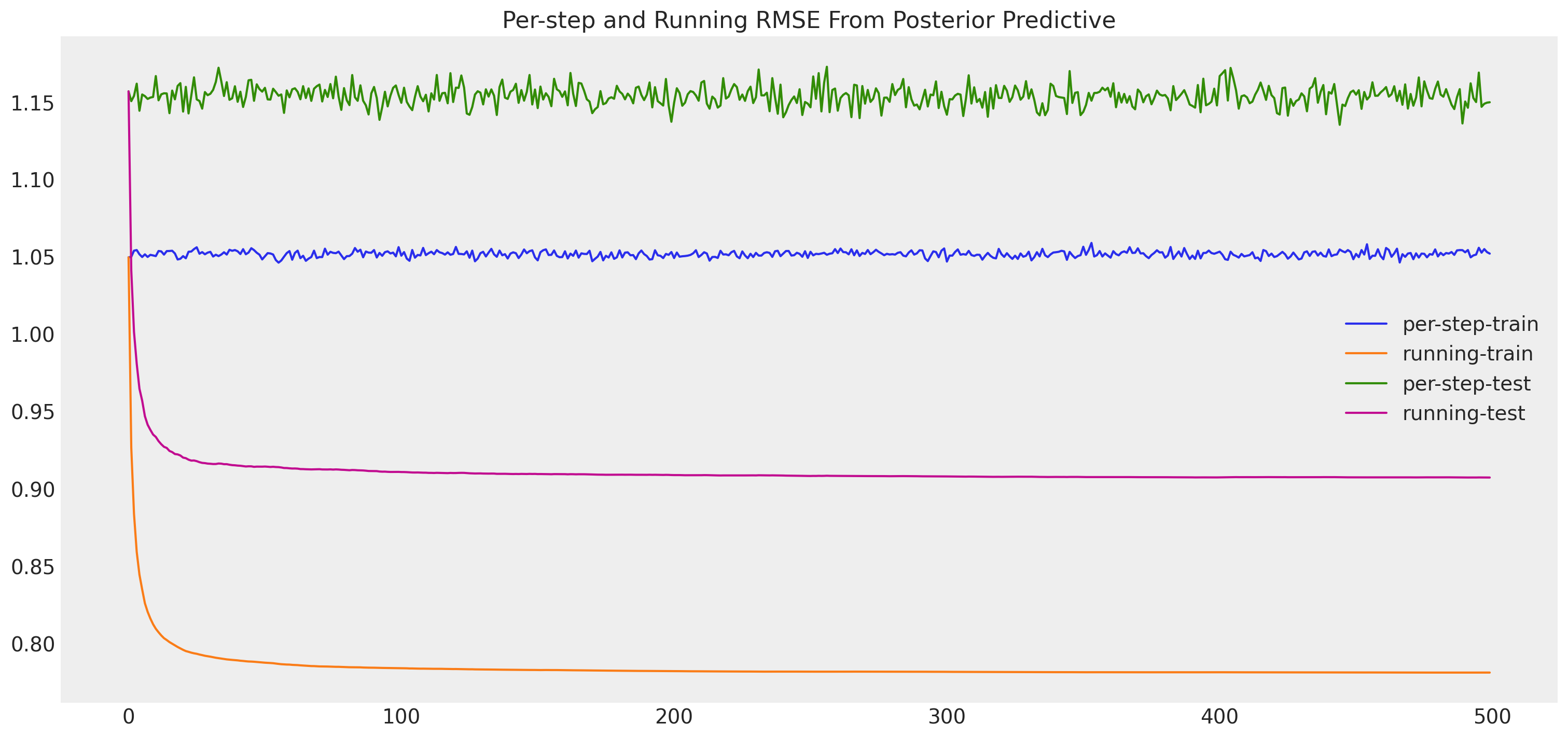

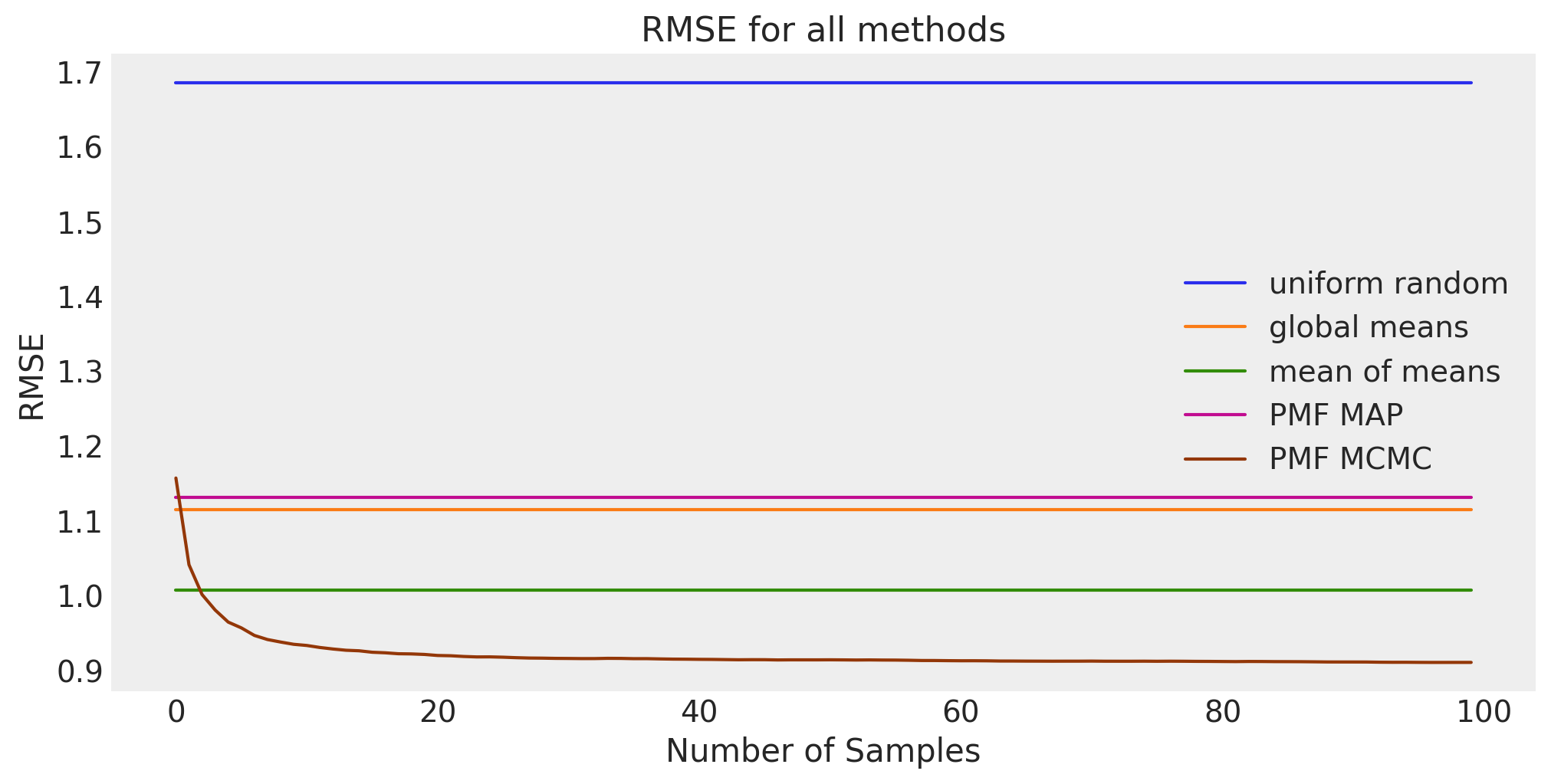